Are you in need of a modern Kafka consumer group offset monitoring application? Look no further than Koffset!

Introduction

Koffset - Kafka Consumer Group Offset Monitoring

In early 2026, I started koffset, a project born of growing frustration with existing consumer group monitoring tools. Issues such as archived or inactive projects, scalability limitations, stale metrics, and hanging endpoints ultimately pushed me down this path.

Consumer lag metrics obtained directly from the broker are inherently stale. They tell you when consumers committed offsets, not the actual processing lag. In addition, if metric collection runs asynchronously from metric retrieval, the reported lag can be even more stale, depending on the cadence of the refresh and scrape operations.
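To make the distinction concrete, offset lag is usually computed per partition as the log-end offset minus the group's last committed offset. The committed offset only advances when the consumer commits, so the result reflects the last commit, not the record currently being processed. The minimal sketch below assumes the two offset maps have already been fetched, for example with the Admin Client's `listOffsets` and `listConsumerGroupOffsets` calls. The `OffsetLag` class and the `"topic-partition"` string keys are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: offset lag = log-end offset - last committed offset. */
final class OffsetLag {

    private OffsetLag() {}

    /** Lag for one partition, clamped at 0 because the two offsets are read at slightly different times. */
    static long partitionLag(long logEndOffset, long committedOffset) {
        return Math.max(0L, logEndOffset - committedOffset);
    }

    /**
     * Per-partition lag for one group, keyed by a "topic-partition" string.
     * Partitions missing from either map are skipped, since lag is undefined for them.
     */
    static Map<String, Long> groupLag(Map<String, Long> logEndOffsets, Map<String, Long> committedOffsets) {
        Map<String, Long> lag = new TreeMap<>();
        for (Map.Entry<String, Long> e : committedOffsets.entrySet()) {
            Long end = logEndOffsets.get(e.getKey());
            if (end != null) {
                lag.put(e.getKey(), partitionLag(end, e.getValue()));
            }
        }
        return lag;
    }

    /** Sum of per-partition lag for the group. */
    static long totalLag(Map<String, Long> perPartitionLag) {
        return perPartitionLag.values().stream().mapToLong(Long::longValue).sum();
    }
}
```

Note that nothing in this arithmetic knows *when* the committed offset was written. A group that committed ten minutes ago and then died looks the same as one that committed a second ago.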

These limitations led to one of my major goals in writing this application: fetching offsets as close as possible to the time they will be scraped, without retrieving offsets and calculating lag within the same request that serves the metrics.
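One way to meet that goal keeps collection and serving on separate paths. This is sketched under my own assumptions and is not necessarily how koffset implements it. A background collector publishes an immutable snapshot, and the scrape handler only reads that snapshot. The collector then times its next run from the observed scrape cadence, so a fresh snapshot lands just before the next expected scrape. The class name, the 4:1 smoothing weights, and the safety margin are all hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical sketch: the collector and the scrape handler share only an immutable snapshot. */
final class ScrapeAlignedCache {

    /** Immutable result of one collection pass. */
    record Snapshot(Instant collectedAt, Map<String, Long> lagByPartition) {}

    private final AtomicReference<Snapshot> latest = new AtomicReference<>();
    private final Duration margin;      // how long before the next scrape a collection should finish
    private Instant lastScrape;         // guarded by this
    private Duration scrapeInterval;    // smoothed estimate, guarded by this

    ScrapeAlignedCache(Duration initialInterval, Duration margin) {
        this.scrapeInterval = initialInterval;
        this.margin = margin;
    }

    /** Called by the collector thread after it has fetched offsets and computed lag. */
    void publish(Snapshot snapshot) {
        latest.set(snapshot);
    }

    /** Called on each scrape: records the scrape time and returns the cached snapshot (no Kafka calls). */
    synchronized Snapshot onScrape(Instant now) {
        if (lastScrape != null) {
            long observedMs = Duration.between(lastScrape, now).toMillis();
            // Weighted moving average (4:1) so one late scrape does not swing the schedule.
            scrapeInterval = Duration.ofMillis((4 * scrapeInterval.toMillis() + observedMs) / 5);
        }
        lastScrape = now;
        return latest.get();
    }

    /**
     * Delay before the collector should start again so that a pass taking
     * lastCollectionTime finishes `margin` before the expected next scrape.
     */
    synchronized Duration nextCollectionDelay(Instant now, Duration lastCollectionTime) {
        if (lastScrape == null) {
            return Duration.ZERO; // no scrape observed yet, so collect immediately
        }
        Instant nextScrape = lastScrape.plus(scrapeInterval);
        Instant startBy = nextScrape.minus(lastCollectionTime).minus(margin);
        Duration delay = Duration.between(now, startBy);
        return delay.isNegative() ? Duration.ZERO : delay;
    }

    synchronized Duration estimatedScrapeInterval() {
        return scrapeInterval;
    }
}
```

In a real collector, the delay returned by `nextCollectionDelay` would typically be handed to `ScheduledExecutorService.schedule(Runnable, long, TimeUnit)` after each pass. The scrape path never blocks on the cluster. It can only ever return data as old as one collection pass plus the margin.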

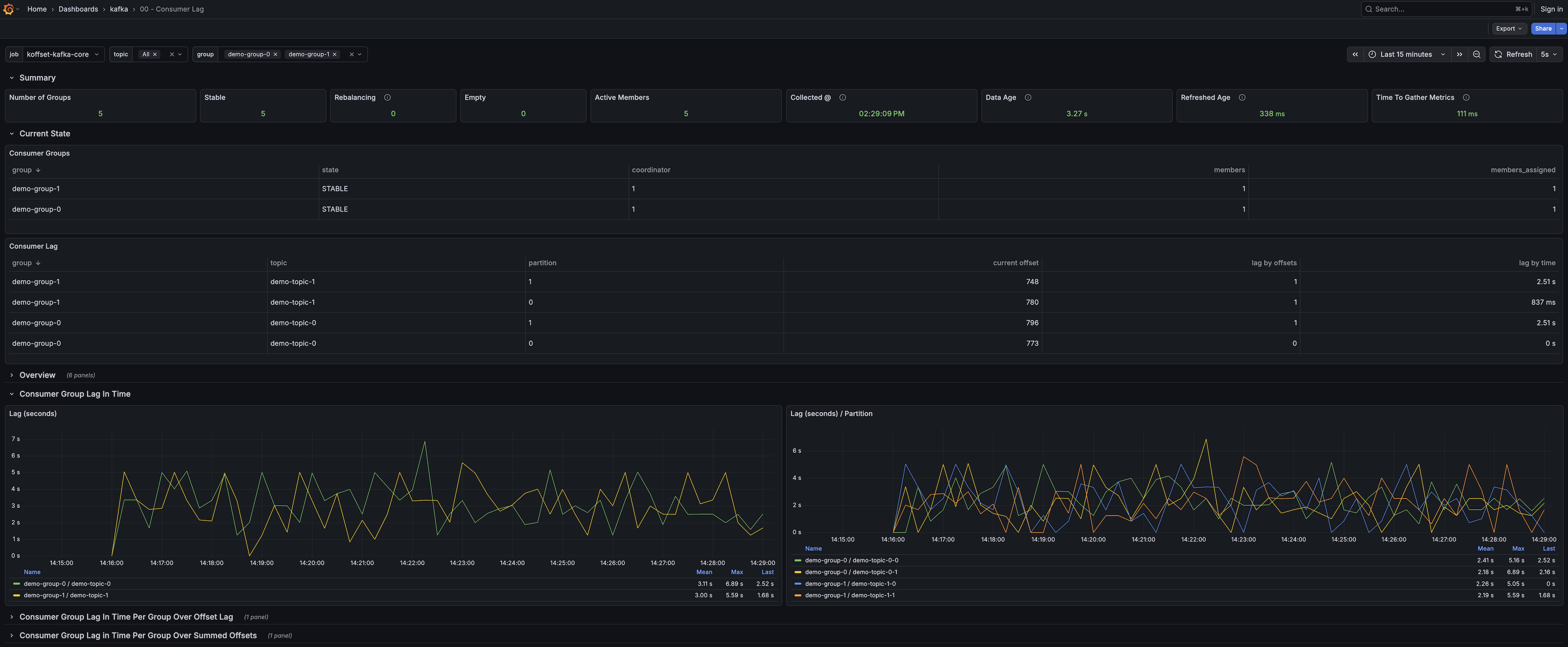

Grafana Dashboard

A sample Grafana dashboard is included with the project.

Check out the dashboard file in the repository to see its configuration. It is part of a complete demo docker-compose setup.

TL;DR

The key points of Koffset:

- Adaptive metric collection - Automatically adjusts collection frequency to align with the scrape interval, keeping metrics as fresh as possible. Keeping metric collection and metric serving in sync is critical to avoiding stale metrics.

- 100% Admin Client-based - Using a consumer client to fetch timestamp-based offset metrics provides more accuracy, but it introduces more overhead than I was willing to accept, so this project does not use a Kafka consumer. I did not benchmark whether a layer of consumers for timestamp collection would run into scaling issues; I went with that assumption.

- Lean Java application - Starts in seconds with a memory footprint under 200 MB (testing and validation are still ongoing). A GraalVM build is also available, using approximately 25 MB of memory. There is no framework; the main dependencies are kafka-clients, Netty, Logback, and SLF4J.

- Time-based lag metrics - Timestamps matter when humans are trying to understand what's actually happening in a system. Much of the experimentation and effort in this application went here, and I expect these algorithms will need to improve over time.

- Group-level metrics - Visibility into group status, members, and coordinators is critical and often overlooked, and I have found some of this information lacking in other lag collection tools. When I have a poorly performing client, I want to compare consumer groups by the broker acting as their coordinator (the broker that manages their committed offsets), and these metrics provide that.

- Staleness detection - It is essential to know whether an offset isn't moving because a producer has stopped publishing or because a consumer has died. Again, this is new in this lag application; time will tell how helpful it is, or whether this information can easily be determined from other metrics.
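Without a consumer reading record timestamps, time-based lag has to be estimated from offsets alone. Some other lag exporters use the following technique, which is not necessarily the algorithm koffset uses. The collector periodically samples (log-end offset, observed time) pairs per partition. It then interpolates when the log end reached the group's committed offset, and time lag is "now minus that estimated time". The same offsets support a simple staleness check: a committed offset that has not moved while the group is caught up points to an idle producer, whereas one stuck behind a backlog points to a stalled consumer. The class, method names, and enum labels below are hypothetical.

```java
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Hypothetical sketch: time lag and staleness for one partition from log-end offset samples. */
final class TimeLagEstimator {

    enum Staleness { ACTIVE, PRODUCER_IDLE, CONSUMER_STALLED }

    /** log-end offset -> epoch millis when that end offset was first observed. */
    private final NavigableMap<Long, Long> samples = new TreeMap<>();
    private final int maxSamples;

    TimeLagEstimator(int maxSamples) {
        this.maxSamples = maxSamples;
    }

    void recordEndOffset(long endOffset, long observedAtMs) {
        samples.putIfAbsent(endOffset, observedAtMs); // first observation of an end offset wins
        while (samples.size() > maxSamples) {
            samples.pollFirstEntry();                  // drop the oldest (lowest-offset) sample
        }
    }

    /**
     * Estimated lag in ms: now minus the interpolated time at which the log end reached
     * the committed offset. Returns 0 when caught up with the newest sample and -1 when
     * there is not enough history to bracket the committed offset.
     */
    long timeLagMs(long committedOffset, long nowMs) {
        Map.Entry<Long, Long> newest = samples.lastEntry();
        if (newest == null) {
            return -1L;
        }
        if (committedOffset >= newest.getKey()) {
            return 0L;
        }
        Map.Entry<Long, Long> lo = samples.floorEntry(committedOffset);
        if (lo == null) {
            return -1L; // committed offset predates the retained samples
        }
        Map.Entry<Long, Long> hi = samples.ceilingEntry(committedOffset); // non-null: committed < newest key
        long reachedAtMs;
        if (hi.getKey().longValue() == lo.getKey().longValue()) {
            reachedAtMs = lo.getValue();
        } else {
            // Linear interpolation between the two samples that bracket the committed offset.
            double fraction = (double) (committedOffset - lo.getKey()) / (hi.getKey() - lo.getKey());
            reachedAtMs = lo.getValue() + Math.round(fraction * (hi.getValue() - lo.getValue()));
        }
        return Math.max(0L, nowMs - reachedAtMs);
    }

    /** Compares two consecutive collections of the same partition. */
    static Staleness classify(long endOffset, long previousCommitted, long committed) {
        if (committed > previousCommitted) {
            return Staleness.ACTIVE;           // the group is making progress
        }
        if (committed >= endOffset) {
            return Staleness.PRODUCER_IDLE;    // caught up: nothing new was published to consume
        }
        return Staleness.CONSUMER_STALLED;     // records are waiting but the committed offset did not move
    }
}
```

A single interval is a noisy signal. A production check would likely require the same classification across several consecutive collections before reporting it.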

Details

The GitHub repository is available at kineticedge/koffset, and I recommend reviewing the README.md for complete details.

The project is licensed under Apache 2.0, and dependencies are intentionally minimal to reduce the impact of CVEs when they arise.

Developer Details

The project provides three ways to build a container for this application:

- build-docker.sh - A multi-stage build used to compile the application and build the image. This is the same image built by the GitHub Actions workflow.

- build-docker-dev.sh - A single-stage build that uses the local build from your laptop; a super fast way to get a new container built for development.

- build-docker-native.sh - A multi-stage build using GraalVM. It leverages the native configuration from a native.sh run of the application. Work is ongoing to improve what the native image includes (e.g., support for SASL authentication).

There is also a run.sh script to run the application without building a distribution. It leverages the Gradle classpath and provides a feel similar to Spring Boot's bootRun Gradle task.

Next Steps of Development

- Load/Scale/Performance testing - Some preliminary testing has been done, but I want a more formal process to give anyone considering koffset the assurance that it can handle their cluster.

- Multiple Cluster Support - This is something I have seen in other collection tools, but I'm still deciding whether I want to support it. I typically spin up administration tools per cluster (unless they have a front-facing UI).

- GraalVM Native Image Improvements - as mentioned above.

- Metric Validation - A means to validate that the derived metrics are accurate, starting with unit tests that verify the math is computed as designed, followed by future work to validate that the derived metrics are meaningful in production.

Source and Image

The best place to learn more is the project's README.md.

You can also pull the release image from the GitHub Container Registry: ghcr.io/kineticedge/koffset-exporter:0.0.1.

docker pull ghcr.io/kineticedge/koffset-exporter:0.0.1

Contact

We work with teams on Kafka application development as well as on improving their operations. Koffset is just one small example of our experience.

If you have questions, need help building event-streaming applications, or want assistance with configuring and monitoring your Kafka infrastructure, please contact us.