Setting up multiple Kafka cluster configurations to explore the nuances of various tools that monitor Apache Kafka.

tl;dr

I have created a new project for testing Kafka tools; check it out on GitHub at kineticedge/kafka-toolage. It has 4 different Kafka clusters. As I add new tools, I will add their configurations to this project and then write about the experience, covering both their configuration and their functionality. I spend a lot of time on web searches and trial and error getting configurations working; I hope I can save you some time.

Introduction

When it comes to Apache Kafka, integration can get complicated. Apache Kafka, Kafka Connect, and Confluent Schema Registry each have an extensive set of configuration options. When you add in 3rd-party tools, each built by an independent development team, it’s challenging to figure out all the configurations.

When you want to evaluate a tool, you want to focus on exploring its features and determining if those features meet your needs; you do not want to worry about setup, and you especially don’t want to finish an evaluation only to find out you cannot easily integrate the tool with your production environment.

Future articles will walk through the setup of tools against these 4 different Apache Kafka cluster configurations. This should shorten your integration time and leave your developers focused on writing Kafka applications rather than on infrastructure configuration.

In addition to seeing how to configure open-source tooling against each cluster configuration, here are some questions I will answer along the way:

If you want to use a JAAS configuration file, why does the Confluent container image for Schema Registry require you to add it to both KAFKA_OPTS and SCHEMA_REGISTRY_OPTS?

The Basic Auth configurations for Schema Registry and Kafka Connect are not the same; what exactly are the differences?

Why is the Confluent Schema Registry configuration in Kafka-UI different from the Kafka Connect and Confluent Java Serializer and Deserializer configurations?

Why Multiple Clusters?

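The 4 clusters showcase Kafka’s four security protocols (PLAINTEXT, SSL, SASL_PLAINTEXT, and SASL_SSL), several SASL mechanisms (PLAIN, SCRAM-SHA-512, and OAUTHBEARER), and multiple options for Kafka Connect and Schema Registry.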

The non-authenticated cluster resembles an internal developer cluster or a POC cluster. It is typically the environment used when trying out Kafka for the first time, or an environment where someone is learning more about Kafka. The cluster demonstrated here has two listeners, PLAINTEXT and SSL, but typically if SSL is desired, the PLAINTEXT listener is omitted.

A SASL-authenticated cluster can offer both unencrypted (SASL_PLAINTEXT) and encrypted (SASL_SSL) connections. Why would anyone want to do this? Well, if you have internal IPs only accessible from in-network, specific tools and connections can use the unencrypted listener to take advantage of zero-copy transfers, which improves the performance of inter-broker communication. You can also use PLAIN authentication instead of SCRAM authentication for super-user access. Using SCRAM-based users as admin users is a little more complicated: you have to create those users while ZooKeeper is up and available (before the brokers are ever started), using tooling that works directly against ZooKeeper. This project shows how to do that.
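
As a rough illustration of creating a SCRAM user directly against ZooKeeper, here is a minimal sketch that builds the `kafka-configs` invocation documented for ZooKeeper-based Kafka releases. The ZooKeeper address, user name, and password are placeholders I made up, and the project's own scripts may do this differently.

```python
import shlex


def scram_user_command(zookeeper, username, password, mechanism="SCRAM-SHA-512"):
    """Build the kafka-configs argument list that stores SCRAM credentials in ZooKeeper.

    Intended to run before the brokers start, while ZooKeeper is already up.
    """
    return [
        "kafka-configs",  # named kafka-configs.sh in the Apache Kafka distribution
        "--zookeeper", zookeeper,
        "--alter",
        "--add-config", f"{mechanism}=[password={password}]",
        "--entity-type", "users",
        "--entity-name", username,
    ]


if __name__ == "__main__":
    # Placeholder values, not taken from the project.
    print(shlex.join(scram_user_command("zookeeper:2181", "kafka-admin", "changeit")))
```

Printing the command rather than running it keeps the sketch safe to try; pass the list to `subprocess.run` from a host or container that can reach ZooKeeper to actually create the user.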

An SSL-authenticated cluster shows how you can use certificates for client authentication. A process for building self-signed certificates is not part of most distributions; this project provides one that uses openssl to create certificates with the proper X.509 extensions.
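
To make the X.509 extension point concrete, here is a minimal sketch that renders an openssl extension file for a certificate usable for both server and client authentication. The section name `v3_kafka` and the host names are hypothetical, and the extensions the project actually sets may differ.

```python
def kafka_cert_extensions(dns_names, section="v3_kafka"):
    """Render an openssl extension section allowing server and client authentication.

    The section name is arbitrary; it only has to match the name given to openssl.
    """
    lines = [
        f"[ {section} ]",
        "basicConstraints = CA:FALSE",
        "keyUsage = digitalSignature, keyEncipherment",
        # clientAuth is what lets the same certificate be used for SSL client authentication.
        "extendedKeyUsage = serverAuth, clientAuth",
        "subjectAltName = @alt_names",
        "",
        "[ alt_names ]",
    ]
    lines += [f"DNS.{i} = {name}" for i, name in enumerate(dns_names, start=1)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical broker host names.
    print(kafka_cert_extensions(["broker-1", "broker-1.example.internal"]))
```

A file like this can be passed to `openssl x509 -req` with `-extfile` and `-extensions v3_kafka` when the CA signs the request.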

Finally, an OAuth-authenticated cluster shows how extensible a tool is when integrating into your environment, and it will help you identify whether your cluster needs to offer more than one authentication mechanism in order to use a particular tool.

All certificates are created internally by this project, in a single turnkey generation that goes from the CA to the broker and client certificates, including intermediate CA certificates.

The goal here is to verify that the open-source tool being considered will work with your environment. While the clusters presented here do not handle all scenarios, they cover many of them, and I hope that shortens the path to getting the tools integrated with your clusters. I have spent hours on incorrect understandings of configuration, of which certificates can be used for client authentication, and of the unique configuration settings of many 3rd-party tools.

While reading the documentation is typically the answer, juggling many documents is not ideal when all you want is to verify that something meets your needs before you spend hours getting it integrated; let us help you with that by showcasing it here.

Years of Apache Kafka experience are behind this setup. Such setups can easily become 2-4 months of effort within your organization. This project’s goal is to help you get up and running in a fraction of that time.

The Clusters

There are way more scenarios than what I have here. What this provides is a way to test out SASL and SSL authentication, unencrypted (PLAINTEXT) and encrypted (SSL) transport, and basic-auth and no-auth access to the RESTful endpoints of Confluent’s Schema Registry and Kafka Connect. It also includes a configuration I had yet to try: a custom OAuth implementation. From a tooling standpoint, I also wanted to see if a tool can handle multiple Connect clusters associated with a given Kafka cluster. This is why one cluster has two separate Connect clusters (using different Kafka listeners): to verify that tools that display Connect cluster information support having more than one.

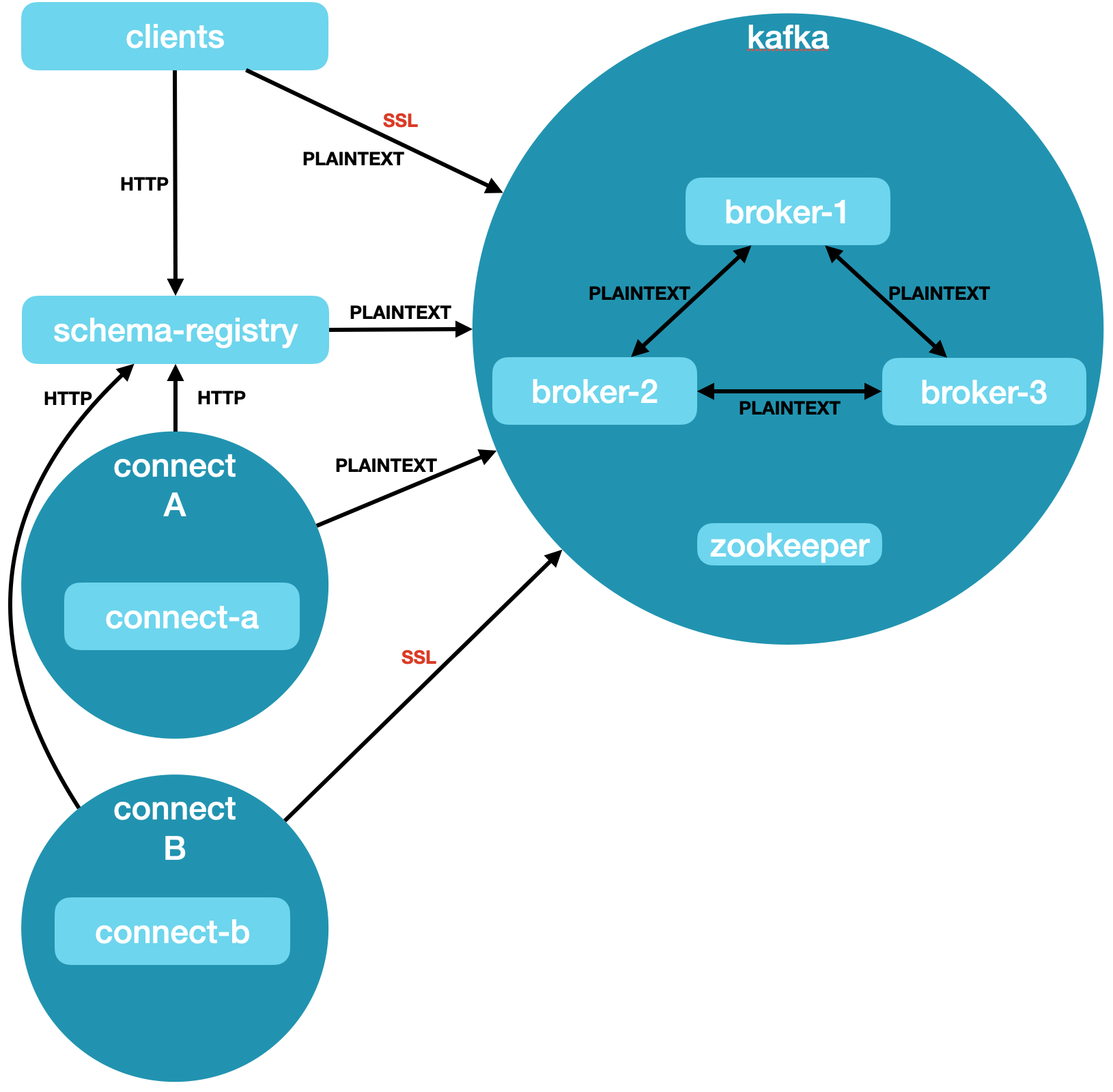

Cluster 1: Non-Authenticated Cluster

- Connectivity
  - Kafka Brokers
    - PLAINTEXT protocol on port 9092
    - SSL protocol on port 9093
  - Schema Registry
    - HTTP
  - Kafka Connect
    - HTTP
- Configuration
  - 1 ZooKeeper
  - 3 Brokers
    - Inter-Broker Protocol PLAINTEXT
  - 1 Schema Registry
    - Broker Protocol PLAINTEXT
  - Kafka Connect Cluster A
    - Broker Protocol PLAINTEXT
  - Kafka Connect Cluster B
    - Broker Protocol SSL

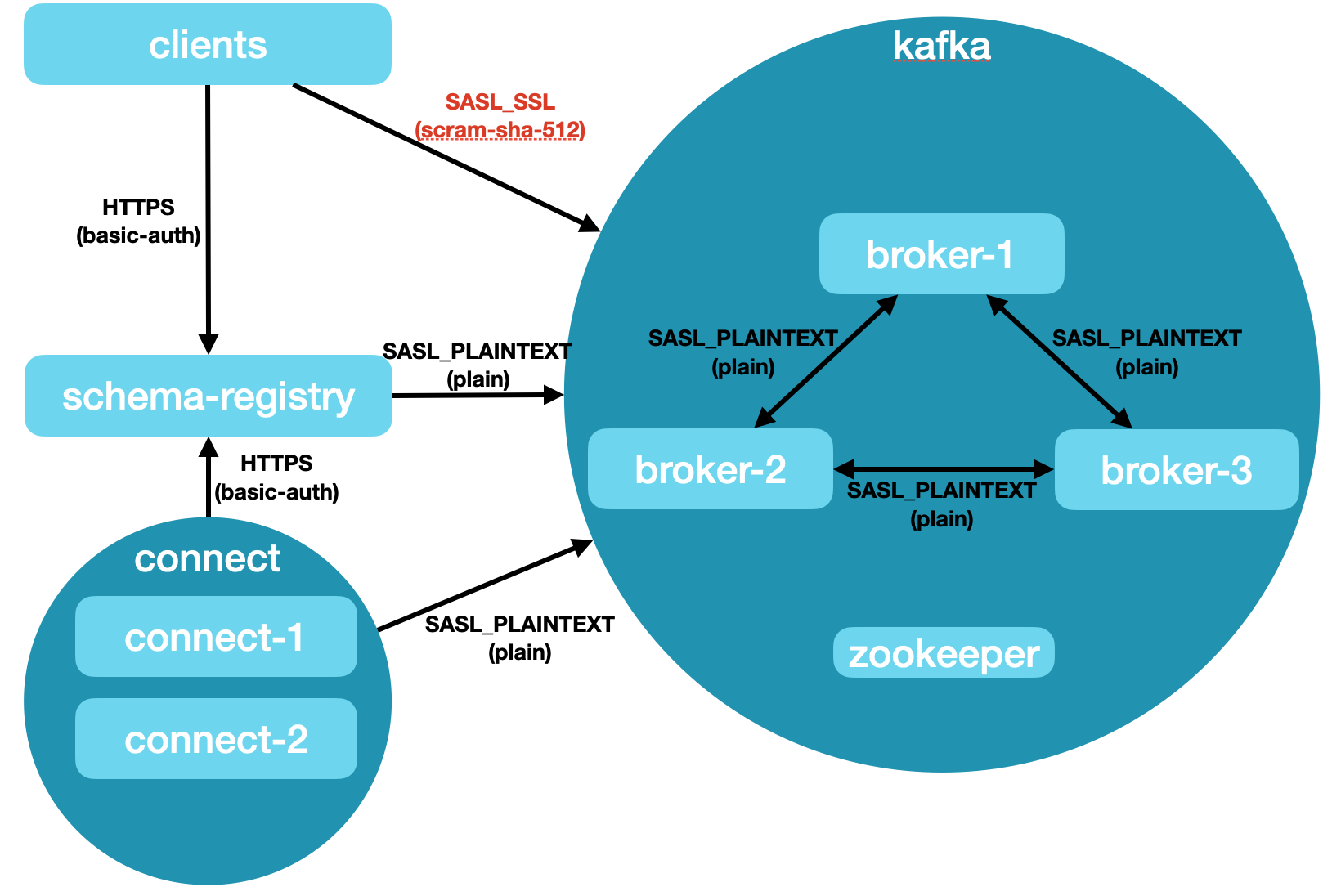

Cluster 2: SASL Authenticated Cluster

- Connectivity
  - Kafka Brokers
    - SASL_PLAINTEXT protocol on port 9092 (SCRAM-SHA-512 and PLAIN)
    - SASL_SSL protocol on port 9093 (SCRAM-SHA-512)
  - Schema Registry
    - HTTPS with Basic Authentication
  - Kafka Connect
    - HTTPS with Basic Authentication
- Configuration
  - 1 ZooKeeper
  - 3 Brokers
    - Inter-Broker Protocol SASL_PLAINTEXT (PLAIN)
  - Schema Registry
    - Broker Protocol SASL_PLAINTEXT (PLAIN)
  - Kafka Connect
    - Two Workers
    - Broker Protocol SASL_PLAINTEXT (PLAIN)
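
For a sense of what a tool ultimately has to pass to its Kafka client for the listeners above, here is a minimal sketch that assembles standard Kafka client properties for either SASL mechanism. The broker address and credentials are placeholders of my own, not values from the project.

```python
# JAAS login modules shipped with Apache Kafka for each mechanism.
LOGIN_MODULES = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def sasl_client_properties(bootstrap, mechanism, username, password, tls=False):
    """Return Kafka client properties for a SASL listener.

    With tls=True (the SASL_SSL listener) a truststore is also needed; it is
    omitted here to keep the sketch focused on the SASL settings.
    """
    module = LOGIN_MODULES[mechanism]
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL" if tls else "SASL_PLAINTEXT",
        "sasl.mechanism": mechanism,
        "sasl.jaas.config": f'{module} required username="{username}" password="{password}";',
    }


def to_properties(props):
    """Serialize a dict as a Java-style .properties file."""
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"


if __name__ == "__main__":
    # Placeholder host and credentials.
    print(to_properties(sasl_client_properties("broker-1:9092", "SCRAM-SHA-512", "alice", "alice-secret")))
```

The output can be saved to a file and handed to the Kafka command-line tools through their `--command-config` option.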

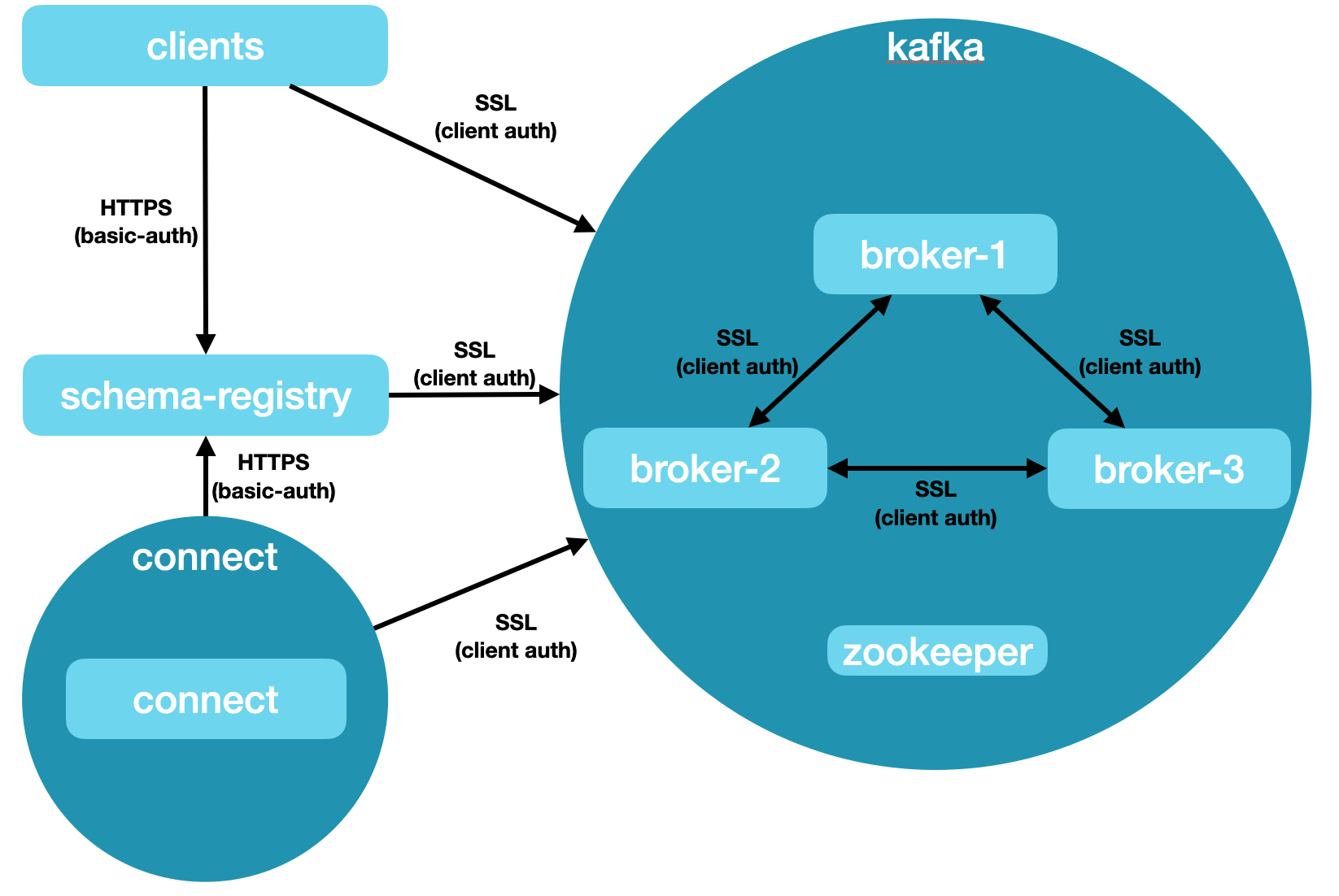

Cluster 3: SSL Authenticated Cluster

- Connectivity
  - Kafka Brokers
    - SSL protocol on port 9093 (SSL Client Authentication required)
  - Schema Registry
    - HTTPS with Basic Authentication
  - Kafka Connect
    - HTTPS with Basic Authentication
- Configuration
  - 1 ZooKeeper
  - 3 Brokers
    - Inter-Broker Protocol SSL
  - Schema Registry
    - Broker Protocol SSL
  - Kafka Connect
    - Broker Protocol SSL
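
Because this cluster requires SSL client authentication, a client needs a keystore in addition to the usual truststore. The sketch below assembles the standard Kafka client properties for that case, assuming file-based JKS or PKCS12 stores; the paths and passwords are placeholders of my own.

```python
def ssl_client_properties(bootstrap, truststore, keystore, store_password, key_password):
    """Return Kafka client properties for an SSL listener that requires client certificates."""
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SSL",
        # The truststore lets the client verify the broker certificates.
        "ssl.truststore.location": truststore,
        "ssl.truststore.password": store_password,
        # The keystore holds the client certificate the brokers will ask for.
        "ssl.keystore.location": keystore,
        "ssl.keystore.password": store_password,
        "ssl.key.password": key_password,
    }


if __name__ == "__main__":
    # Placeholder paths and passwords.
    props = ssl_client_properties(
        "broker-1:9093",
        "/certs/truststore.jks",
        "/certs/client.keystore.jks",
        "changeit",
        "changeit",
    )
    for key, value in props.items():
        print(f"{key}={value}")
```

When evaluating a tool against this cluster, the useful question is whether it exposes the three keystore settings at all; many connection failures come from configuring only the truststore.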

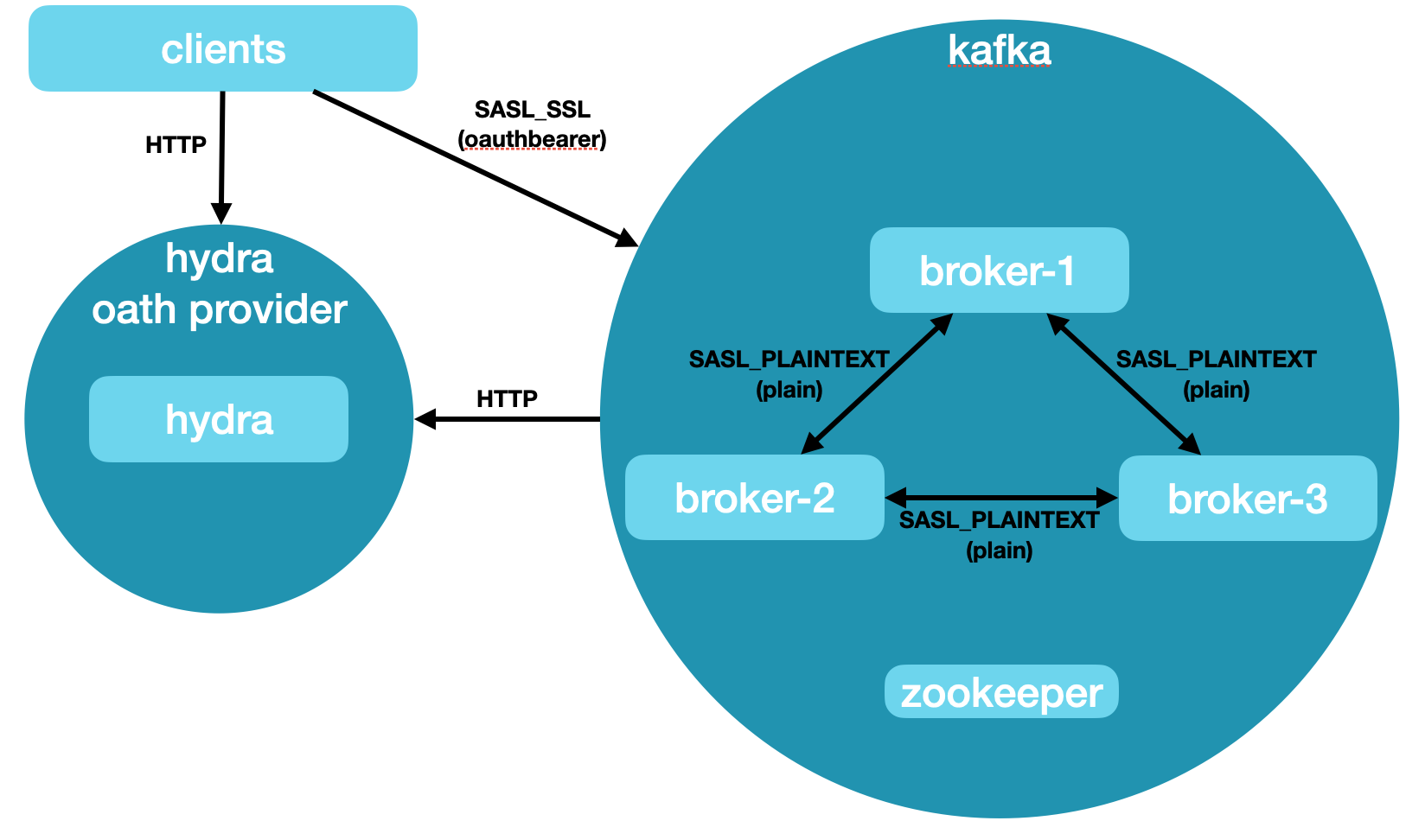

Cluster 4: OAUTH Authenticated Cluster

- Connectivity
  - Kafka Brokers
    - SASL_PLAINTEXT protocol on port 9092 (PLAIN)
    - SASL_SSL protocol on port 9093 (OAUTHBEARER)
- Configuration
  - 1 ZooKeeper
  - 3 Brokers
    - Inter-Broker Protocol SASL_PLAINTEXT (PLAIN)
  - No Schema Registry
  - No Connect Cluster
  - Open Source Hydra OAuth Server
    - Image extended to pre-configure the database and create users.
  - OAuth client Java library
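
To show the kind of exchange an OAuth client library performs before it can authenticate to the OAUTHBEARER listener, here is a minimal sketch of a standard OAuth 2.0 client-credentials token request (RFC 6749, section 4.4). I am assuming the client-credentials grant and HTTP Basic client authentication; the token URL, client id, and secret are placeholders, and the project's Java library may work differently.

```python
import base64
import urllib.parse
import urllib.request


def client_credentials_request(token_url, client_id, client_secret, scope=None):
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    form = {"grant_type": "client_credentials"}
    if scope:
        form["scope"] = scope
    # The client authenticates to the token endpoint with HTTP Basic credentials.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return urllib.request.Request(
        token_url,
        data=urllib.parse.urlencode(form).encode(),
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder endpoint and credentials.
    request = client_credentials_request("https://hydra:4444/oauth2/token", "kafka-client", "secret")
    print(request.get_method(), request.full_url)
    print(request.data.decode())
```

Sending the request with `urllib.request.urlopen` returns a JSON body whose `access_token` field is the bearer token a Kafka client would present to the broker.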

Demonstration Project

The above clusters and configurations are freely available in an Apache 2.0 Licensed project on GitHub. This licensing does not apply to the components being evaluated, and you need to validate and understand their licensing before bringing them into your organization.

Project Notes

- If clusters fail to start, the first thing to check is whether you created the certificates. If the certificates don’t exist, the brokers will fail to start, and the services that depend on the brokers will hang.

- Because multiple clusters run at once, port mapping is not done. Use the jumphost container to run the Kafka command-line tools against the clusters.

- This project is being developed on a Mac M1 Max with 64GB, with 32GB dedicated to Docker. On a machine with fewer resources, consider testing against one cluster at a time.

- As I add in new tools, I expect I will have to go back and make some changes. Hopefully not, but given all the nuances between the clusters and the copy-and-paste issues I have already uncovered, I expect I will find a few more.
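
Since no ports are mapped, commands go through the jumphost container. Here is a minimal sketch of wrapping a Kafka command-line call in `docker exec`, assuming the container is named `jumphost`; the broker host name is a placeholder of my own.

```python
import shlex


def jumphost_command(tool_args, container="jumphost"):
    """Wrap a Kafka command-line invocation so it runs inside the jumphost container."""
    return ["docker", "exec", "-it", container, *tool_args]


if __name__ == "__main__":
    # Placeholder broker address on the internal Docker network.
    command = jumphost_command(["kafka-topics", "--bootstrap-server", "broker-1:9092", "--list"])
    print(shlex.join(command))
```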

Classifications

Tool classifications, for the sake of these reviews, are monitoring, observation, and administration.

Example operations for each:

- Monitoring

- Bytes in and out on a given topic

- Disk utilization of brokers

- Number of partitions on a broker

- Connector status and health

- Observation

- Inspect Messages on a topic

- Inspect a Schema

- Consumer Lag

- Administration (Management)

- Move partitions of a topic

- Change the retention time of a topic

- Adjust offsets of a consumer group

- Pause and resume a connector

- Delete a schema
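
As an example of what an observation tool computes, consumer lag for a partition is the log end offset minus the group's committed offset. Here is a minimal sketch with made-up offsets; real tools fetch both values from the cluster.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Return per-partition lag: log end offset minus committed offset.

    Partitions without a committed offset map to None, because the lag
    is undefined until the group commits for that partition.
    """
    lag = {}
    for partition, end in end_offsets.items():
        committed = committed_offsets.get(partition)
        lag[partition] = None if committed is None else max(end - committed, 0)
    return lag


if __name__ == "__main__":
    # Made-up offsets for a three-partition topic.
    end_offsets = {0: 100, 1: 50, 2: 7}
    committed_offsets = {0: 85, 1: 50}
    print(consumer_lag(end_offsets, committed_offsets))
```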

Tools can support multiple operations, but keep in mind that each tool is developed with a specific feature set in mind. I expect this list to change as I learn more about each tool while integrating it with these clusters.

Tools

These are the tools currently planned for evaluation against these clusters. When a review is completed, a link to it will be made available here. Each review is more than an overview of the tool’s features and how they work and integrate with Apache Kafka; it is also a reference showing the integration with each cluster, in the hope that at least one of these configurations is close to your setup and makes your integration and evaluation quicker.

- AKHQ

- CMAK

- Grafana

- Kafdrop

- Kafka-UI

- kowl

- Lensesio

The order listed does not indicate the order of review.

Coming Soon

First I will explore Grafana, which leverages Prometheus and the Prometheus JMX Exporter. Then I will explore Kafka-UI.

Questions You May Have

Why is a tool missing from the list?

This list is currently based on tools that are open-source with free-to-use licensing. Please feel free to suggest additional tools for evaluation. If the tool is commercial, a free developer license is required for my review. That license should be renewable, as I hope to reevaluate when major changes are released to Apache Kafka (e.g. KRaft) and to the respective tool.

Will you succeed?

I do not yet know the configuration of each tool. I may “give up” and say something is not possible, only to learn later that I just didn’t know how to do it. When that happens, I will be more than happy to update the process, admit my mistake, and make it easier for others to integrate tools with a given setup.

When will the results of each tool be posted?

My timeline will not be consistent. I have other areas of interest to write about, so I expect other articles to be intermixed with this series. I also expect a fair amount of work on my part to understand and test things well before I write about them. I can say I have two integrations nearly completed, but I still have to write them up.

What about all the interesting stuff you learned along the way?

The technology questions I have uncovered during this process (like the 3 I mentioned above) will be covered throughout the set of articles.

Reach out

Please contact us if you have ideas, suggestions, want clarification, or just want to talk Apache Kafka administration.