Introduction

Are you interested in using Grafana to monitor an Apache Kafka cluster? Are you concerned about whether you can integrate it with your specific cluster configuration? Using Grafana requires a fair amount of infrastructure to be established. While there are plenty of examples out there, you can spend a lot of time adjusting dashboards to get the setup you want.

Takeaways

- A project that provides a single deployed Grafana to monitor multiple Apache Kafka clusters and multiple Kafka Connect clusters within an Apache Kafka cluster.

- A walk-through of the configuration, so you have an end-to-end understanding of what it will take to leverage Grafana within your organization.

- An overview and review of Grafana as an Apache Kafka tool.

TL;DR

- JMX metrics are a great way to see the health of an Apache Kafka Cluster.

- Grafana Dashboards are a great visualization of Kafka components, including applications built with the kafka-clients or kafka-streams Java libraries.

- A complete example of using Grafana against 4 differently configured clusters is available in the kafka-toolage repository.

- The coupling of JMX Prometheus Exporter configuration, Prometheus scraping, and Grafana queries makes it harder to combine dashboards from others.

- The dashboards here are based on my experience, with a lot of inspiration from Confluent’s GitHub repository (Apache 2.0 License).

Important Considerations

- Kafka monitoring with Grafana is not a turnkey solution; expect your organization to invest time in it.

- Grafana’s license changed to AGPLv3, from Apache License 2.0, in April 2021. Review it and make sure this works for your organization.

- Leveraging JMX Prometheus Exporter exposes broker metrics on an HTTP endpoint; enable security if that endpoint is accessible outside the Kafka tools network.

- Scraping JMX metrics through the endpoint can impact performance; tune accordingly by exporting only the metrics you need and reducing how often Prometheus scrapes them.

Infrastructure Needed

- JMX Prometheus Exporter Agent attached to every monitored JVM

- JMX Prometheus Exporter Configuration for each service

  - ZooKeeper

  - Brokers

  - Connect

  - Schema Registry

  - Clients (if desired)

- Prometheus

  - Configuration for each JVM to pull in the data from the agents

- Grafana

  - Prometheus Integration

  - Dashboards

  - Variables to allow for supporting multiple clusters

This is a rather extensive list and can feel overwhelming. Take it one step at a time and it will all fall into place.

Software

This is the software and the versions used at the time of this writing.

| Component | Container | License | Version Used (release date) |
|---|---|---|---|
| Prometheus JMX Exporter | N/A | Apache License 2.0 | 0.16.1 ¹ (2021-07-14) |
| Prometheus | Docker | Apache License 2.0 | 2.38.0 (2022-08-16) |
| Grafana | Docker | GNU AGPL v3.0 | 9.1.4 (2022-09-09) |

¹ The latest version is 0.17.0 (2022-05-23), but it did not work in this setup: a dependency issue results in the unknown-field error shown below. I did some research into resolving the issue, but I see no technical or security reason to be on 0.17.0, so I stayed on 0.16.1.

noauth-broker-1 | Exception in thread "main" java.lang.reflect.InvocationTargetException
noauth-broker-1 | ...
noauth-broker-1 | at java.instrument/sun.instrument.InstrumentationImpl.loadClassAndCallPremain(InstrumentationImpl.java:525)
noauth-broker-1 | Caused by: java.lang.NoSuchFieldError: UNKNOWN
noauth-broker-1 | at io.prometheus.jmx.JmxCollector$Rule.<init>(JmxCollector.java:57)

The Challenges

- The Grafana project does not provide Kafka dashboards. There are various ones out there, and their configuration is coupled to the configuration of JMX Prometheus Exporter as well as how that is integrated into Prometheus.

- Clients typically use Grafana for other dashboards already and want the ability to customize the experience. That usually means the organization takes on the maintenance and configuration of the dashboards, which takes time.

- There are metrics that cannot be obtained from JMX that are desired to be part of Grafana dashboards; this disconnect can lead to some challenges.

- Monitoring clients (producers, consumers, and stream applications) requires exporting and scraping their metrics. While the repository provides Kafka client dashboards, they are not reviewed here.

- Also, JMX monitoring of clients is only available in the Java client. JMX is a Java monitoring API and is not implemented by librdkafka or other non-Java client libraries.

Setup

Adding Grafana to your Apache Kafka tooling has many steps. Some of these pieces require working with your organization to ensure you comply with any security considerations. While exposing JMX Metrics is a read-only operation, that doesn’t mean security is not an issue.

Since setup is an important part of evaluating a tool, we will walk through various parts of the setup process. Leverage the example repository for complete details.

JMX Prometheus Exporter

If you are new to JMX Metrics and the JMX Prometheus Exporter, start with a simple configuration to expose all metrics. Eventually, limit the metrics to only those needed to power the dashboards.

Configuration

This configuration exposes all metrics.

config.yml – everything

lowercaseOutputName: true
rules:
  - pattern: ".*"

Integration

Attach the exporter to each JVM as a Java agent. For Apache Kafka components, add this to your KAFKA_OPTS environment variable.

-javaagent:/jmx_prometheus_javaagent.jar=7071:/config.yml

Validation

Testing is simple: request the endpoint and confirm that metrics come back.

% curl http://localhost:7071
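To go a step beyond eyeballing the curl output, a small script can confirm that the metrics you expect are present. The following is a minimal sketch, assuming the exporter returns the standard Prometheus text exposition format at the URL used above. The helper names `metric_names` and `fetch` and the sample output are hypothetical and exist only for illustration.

```python
import re
from urllib.request import urlopen

# Matches the metric name at the start of a sample line, e.g.
#   kafka_server_foo{topic="a"} 1.0
_NAME = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)")


def metric_names(exposition_text):
    """Return the set of metric names found in Prometheus text-format output."""
    names = set()
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks, HELP and TYPE comments
            continue
        match = _NAME.match(line)
        if match:
            names.add(match.group(1))
    return names


def fetch(url="http://localhost:7071", timeout=5):
    """Fetch the exporter output; the default URL mirrors the curl check."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Hypothetical sample output; real output is much longer.
SAMPLE = """\
# HELP jvm_threads_current Current thread count of a JVM
# TYPE jvm_threads_current gauge
jvm_threads_current 42.0
kafka_server_brokertopicmetrics_oneminuterate{name="BytesInPerSec",topic="orders"} 1024.5
"""

found = metric_names(SAMPLE)
print(sorted(found))
```

Against a running broker, `metric_names(fetch())` could be compared with the list of metrics your dashboards rely on.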

Adjustment

Limit the configuration to only the metrics you need by replacing .* with specific patterns.

This reduces the burden on the services for providing these metrics.

- pattern: kafka.server<type=BrokerTopicMetrics, name=BytesInPerSec, topic=(.+)><>OneMinuteRate

config.yml – specific rules

lowercaseOutputName: true
rules:
  - pattern: kafka.server<type=BrokerTopicMetrics, name=BytesInPerSec, topic=(.+)><>OneMinuteRate
  - pattern: kafka.network<type=RequestMetrics, name=(.+), request=(.+)><>999thPercentile
  - pattern: kafka.network<type=RequestMetrics, name=(.+), request=(.+)><>99thPercentile
  - pattern: kafka.network<type=RequestMetrics, name=(.+), request=(.+)><>95thPercentile
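Rules without a `name` fall back to the exporter's default naming, which is where metric names such as `kafka_server_brokertopicmetrics_oneminuterate` come from. The sketch below approximates that default as I understand it from the exporter's documentation: domain, first bean property value, and attribute name joined with underscores, with the remaining bean properties turned into labels. It is an illustration rather than the exporter's actual code; the function name and the `orders` topic are hypothetical.

```python
import re


def default_metric_name(domain, bean_properties, attribute, lowercase=True):
    """Approximate the default metric name and labels for one MBean attribute.

    bean_properties is an ordered list of (key, value) pairs, in the order
    they appear in the MBean name.
    """
    first_value = bean_properties[0][1]
    raw = "_".join([domain, first_value, attribute])
    # Characters that are not valid in a Prometheus metric name become "_".
    name = re.sub(r"[^a-zA-Z0-9_:]", "_", raw)
    if lowercase:  # mirrors lowercaseOutputName: true
        name = name.lower()
    labels = dict(bean_properties[1:])  # label values keep their case
    return name, labels


name, labels = default_metric_name(
    "kafka.server",
    [("type", "BrokerTopicMetrics"), ("name", "MessagesInPerSec"), ("topic", "orders")],
    "OneMinuteRate",
)
print(name, labels)
```

This is the coupling the article keeps returning to: change the exporter rules and the metric names and labels that Grafana queries depend on change with them.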

Some JMX metrics cannot be scraped without mapping parameters to labels. Informational metrics, such as version strings, need a rule that exposes the attribute and its value as labels and supplies a dummy numeric value, because Prometheus stores only numeric sample values. This is an example of accessing the broker version from an app-info metric.

- pattern: "kafka.server<type=app-info, id=(.+)><>(.*): (.*)"
  name: kafka_server_app_info_$2
  labels:
    kafka_broker: "$1"
    kafka_version: "$3"
  value: 1

I recommend rewriting patterns only when absolutely necessary.
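To see how the capture groups in the app-info rule end up in the metric name and labels, here is a minimal sketch that replays the substitution with Python's `re` module. It assumes the exporter matches the pattern against a string of the form `domain<properties><>attribute: value`. The input line and version number are hypothetical, and the code illustrates the mapping rather than the exporter's implementation.

```python
import re

# The rule from the configuration above, expressed as a Python dict.
RULE = {
    "pattern": r"kafka.server<type=app-info, id=(.+)><>(.*): (.*)",
    "name": "kafka_server_app_info_$2",
    "labels": {"kafka_broker": "$1", "kafka_version": "$3"},
    "value": 1,
}


def expand(template, match):
    """Replace $1, $2, ... in a template with the matching capture group."""
    return re.sub(r"\$(\d+)", lambda m: match.group(int(m.group(1))), template)


def apply_rule(rule, candidate):
    """Return (name, labels, value) if the rule matches, otherwise None."""
    match = re.search(rule["pattern"], candidate)
    if match is None:
        return None
    name = expand(rule["name"], match)
    labels = {key: expand(value, match) for key, value in rule["labels"].items()}
    return name, labels, rule["value"]


# Hypothetical attribute line for broker id 1.
result = apply_rule(RULE, "kafka.server<type=app-info, id=1><>version: 3.2.0")
print(result)
```

The version string ends up in a label while the sample value is the constant 1, which is what makes an informational metric storable.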

Prometheus

Prometheus is a time-series database used to capture the scraped metrics. It has to be configured to scrape the data and store it. Getting Prometheus’ configuration right is the key to supporting multiple types of clusters and multiple instances. For this project, a cluster_type label is added to separate statistics from brokers, connect, and schema-registry, and a cluster_id label identifies each cluster.

Configuration

To pull the JMX Prometheus Exporter endpoint into Prometheus, you need to set up a job under scrape_configs. Prometheus provides many service-discovery mechanisms; the one you use depends on the infrastructure of your organization. For this setup, static_configs is used. If I were to add client metrics, I would use file_sd_configs for demonstration and kubernetes_sd_configs for applications deployed within Kubernetes.

Here is the configuration for the noauth brokers.

The additional labels make it easier to separate metrics within Grafana.

The relabel_configs entry strips the port from the target’s address when setting the instance label.

prometheus.yml

global:
  scrape_interval: 15s
scrape_configs:
  - job_name: noauth-kafka
    static_configs:
      - targets:
          - noauth-broker-1:7071
          - noauth-broker-2:7071
          - noauth-broker-3:7071
        labels:
          cluster_type: "kafka"
          cluster_id: "noauth"
    relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):(.*)'
        target_label: instance
        replacement: '$1'
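It can help to see which labels each scraped series ends up with under this job. The following is a minimal sketch of that outcome, assuming the usual Prometheus behavior: the relabel regex is fully anchored, `instance` defaults to the target address when nothing sets it, and labels starting with `__` are dropped after relabeling. The function and constant names are hypothetical, and Prometheus' `$1` is written as `\1` for Python.

```python
import re

# Mirrors the static_configs entry for the noauth brokers.
STATIC_CONFIG = {
    "targets": ["noauth-broker-1:7071", "noauth-broker-2:7071", "noauth-broker-3:7071"],
    "labels": {"cluster_type": "kafka", "cluster_id": "noauth"},
}

# Mirrors the relabel_configs entry.
RELABEL = {
    "source_label": "__address__",
    "regex": r"(.*):(.*)",
    "target_label": "instance",
    "replacement": r"\1",
}


def target_labels(job_name, static_config, relabel):
    """Return the label set attached to each target after relabeling."""
    results = []
    for address in static_config["targets"]:
        labels = {"job": job_name, "__address__": address, **static_config["labels"]}
        match = re.fullmatch(relabel["regex"], labels[relabel["source_label"]])
        if match:
            labels[relabel["target_label"]] = match.expand(relabel["replacement"])
        labels.setdefault("instance", address)  # default when no rule sets it
        # Internal labels (prefixed with "__") are not stored with the series.
        results.append({k: v for k, v in labels.items() if not k.startswith("__")})
    return results


label_sets = target_labels("noauth-kafka", STATIC_CONFIG, RELABEL)
for label_set in label_sets:
    print(label_set)
```

These are the labels that Grafana queries can later filter on, for example `cluster_id` and `cluster_type`.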

Validation

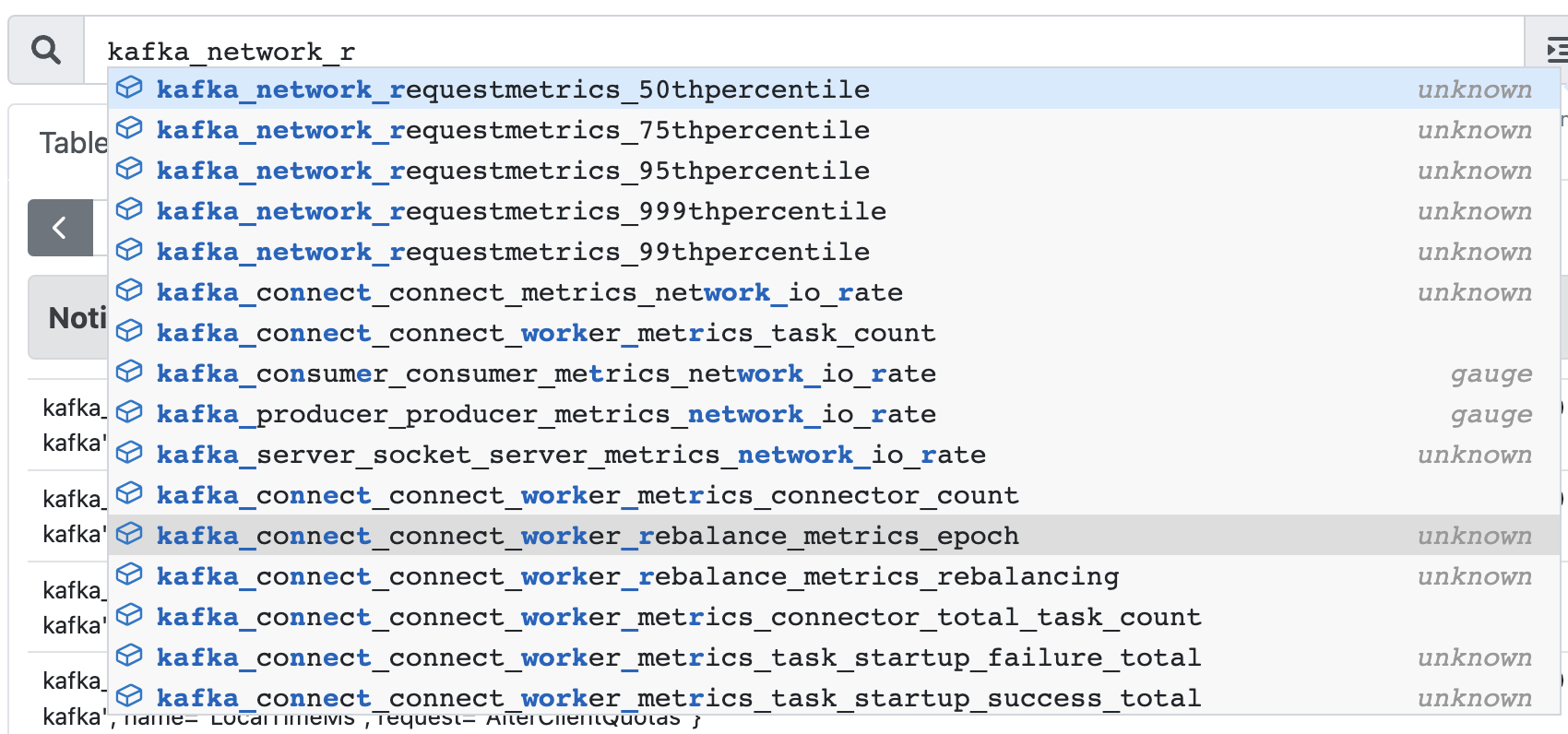

Prometheus provides a nice web interface for inspecting its database. With Prometheus’ built-in autocomplete, you can start typing a metric name and see the stored metrics that match it.

The Prometheus UI is the best way to validate JMX metrics gathering and configuration. Checking the JMX Prometheus Exporter endpoint directly confirms that the exporter works, but its output does not include the additional labels added by Prometheus, so check the Prometheus UI as well. It also offers additional views for confirming that your jobs are configured as expected.

NOTE

Storage is based on retention and sampling.

The default retention is 15 days.

To change the retention time, use the command line parameter --storage.tsdb.retention.time.

needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample
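As a worked example of the formula, here is a minimal sketch in Python. The series count is a made-up figure (3 brokers with about 10,000 series each), and the default of 2 bytes per sample is an assumption at the upper end of the 1 to 2 bytes per sample cited in the Prometheus documentation; substitute your own numbers.

```python
def needed_disk_space_bytes(retention_days, series_count,
                            scrape_interval_seconds, bytes_per_sample=2.0):
    """Estimate Prometheus disk usage using the formula from the note above."""
    retention_time_seconds = retention_days * 24 * 60 * 60
    # Every series yields one sample per scrape interval.
    ingested_samples_per_second = series_count / scrape_interval_seconds
    return retention_time_seconds * ingested_samples_per_second * bytes_per_sample


# Hypothetical inputs: default 15-day retention, 30,000 series, 15s scrape interval.
estimate = needed_disk_space_bytes(
    retention_days=15, series_count=30_000, scrape_interval_seconds=15
)
print(f"{estimate / 1024 ** 3:.2f} GiB")
```

Doubling the scrape interval or halving the number of exported series halves the estimate, which is another reason to trim the exporter rules.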

Grafana

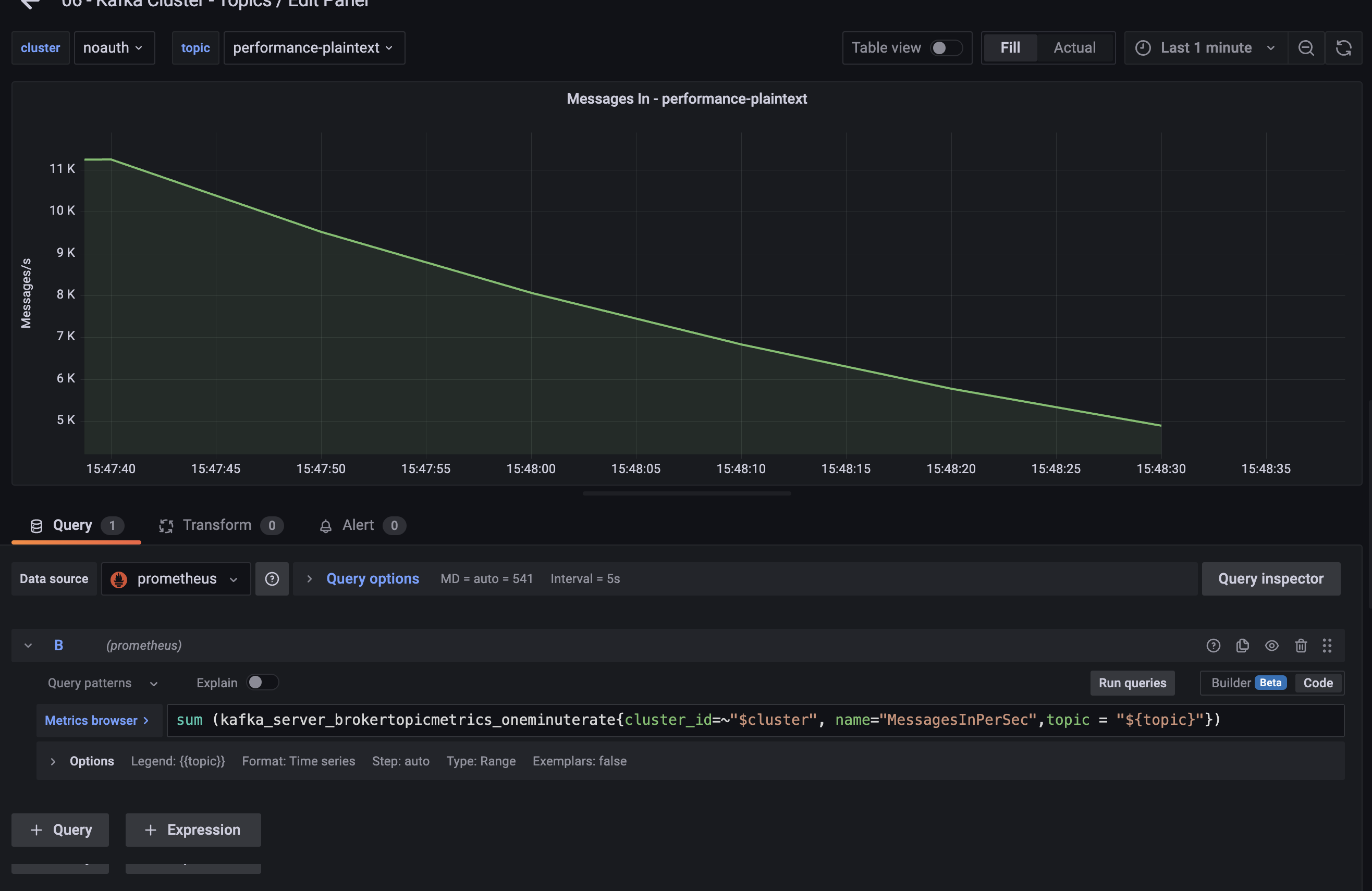

The setup of Grafana is not simple, but if the pieces are in place and validated, then it gets easier. Assuming you have Prometheus integrated and variables configured, you can quickly add in a query. If you are like me, however, you will experiment with various panels and tweak their display and size.

Configuration

The heart of the configuration is writing a query that aligns with what you found in Prometheus.

Use the Prometheus UI to ensure you have it correct.

Grafana provides documentation on writing queries.

Understanding the by and without aggregation clauses is an important step in writing queries that separate or combine measurements.

simple query

sum (
  kafka_server_brokertopicmetrics_oneminuterate {
    name="MessagesInPerSec",
    cluster_id=~"$cluster",
    topic="${topic}",
    cluster_type="kafka"
  }
)

more advanced query

sum without(instance,job) (
  kafka_server_brokertopicmetrics_oneminuterate {
    name="MessagesInPerSec",
    topic!~"(_)+confluent.+",
    topic!="",
    cluster_id=~"${cluster}",
    cluster_type="kafka"
  }
)

Grafana queries depend on the configuration of JMX Prometheus Exporter and Prometheus.
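The difference between the two queries above is easier to see on a handful of values. The sketch below imitates, in plain Python, what a bare `sum(...)` and a `sum without(instance, job) (...)` do to a set of series. The sample values and topic names are made up, and this illustrates the grouping semantics only, not how Prometheus evaluates queries.

```python
from collections import defaultdict

# Hypothetical series: (labels, current value).
SAMPLES = [
    ({"instance": "noauth-broker-1", "job": "noauth-kafka",
      "cluster_id": "noauth", "topic": "orders"}, 10.0),
    ({"instance": "noauth-broker-2", "job": "noauth-kafka",
      "cluster_id": "noauth", "topic": "orders"}, 5.0),
    ({"instance": "noauth-broker-1", "job": "noauth-kafka",
      "cluster_id": "noauth", "topic": "payments"}, 2.0),
]


def sum_without(samples, dropped):
    """Sum values after removing the dropped labels; remaining labels form the group."""
    totals = defaultdict(float)
    for labels, value in samples:
        key = tuple(sorted((k, v) for k, v in labels.items() if k not in dropped))
        totals[key] += value
    return dict(totals)


def sum_all(samples):
    """A bare sum(): every series collapses into a single value."""
    return sum(value for _, value in samples)


total = sum_all(SAMPLES)
per_topic = sum_without(SAMPLES, dropped={"instance", "job"})
print(total)
for key, value in per_topic.items():
    print(dict(key), value)
```

The bare sum yields one number for a panel such as a single stat, while the `without` form keeps one series per topic and cluster, which is what a per-topic graph needs.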

Showcase

I am quite happy with the Grafana dashboards. The review is not about them, but about the success in using Grafana to access and display the metrics across the multiple cluster configurations.

4 Clusters

The clusters used for analysis are from the first article in this series, Apache Kafka Monitoring and Management.

| cluster | listener type - 9092 | listener type - 9093 | schema registry | kafka connect |
|---|---|---|---|---|
| noauth | plaintext | ssl | http | http |
| sasl | sasl_plaintext (plain) | sasl_ssl (scram) | https / basic auth | https / basic auth |
| ssl | - | ssl | https / basic auth | https / basic auth |
| oauth | sasl_plaintext (plain) | sasl_ssl (oauth) | n/a | n/a |

Cluster Health

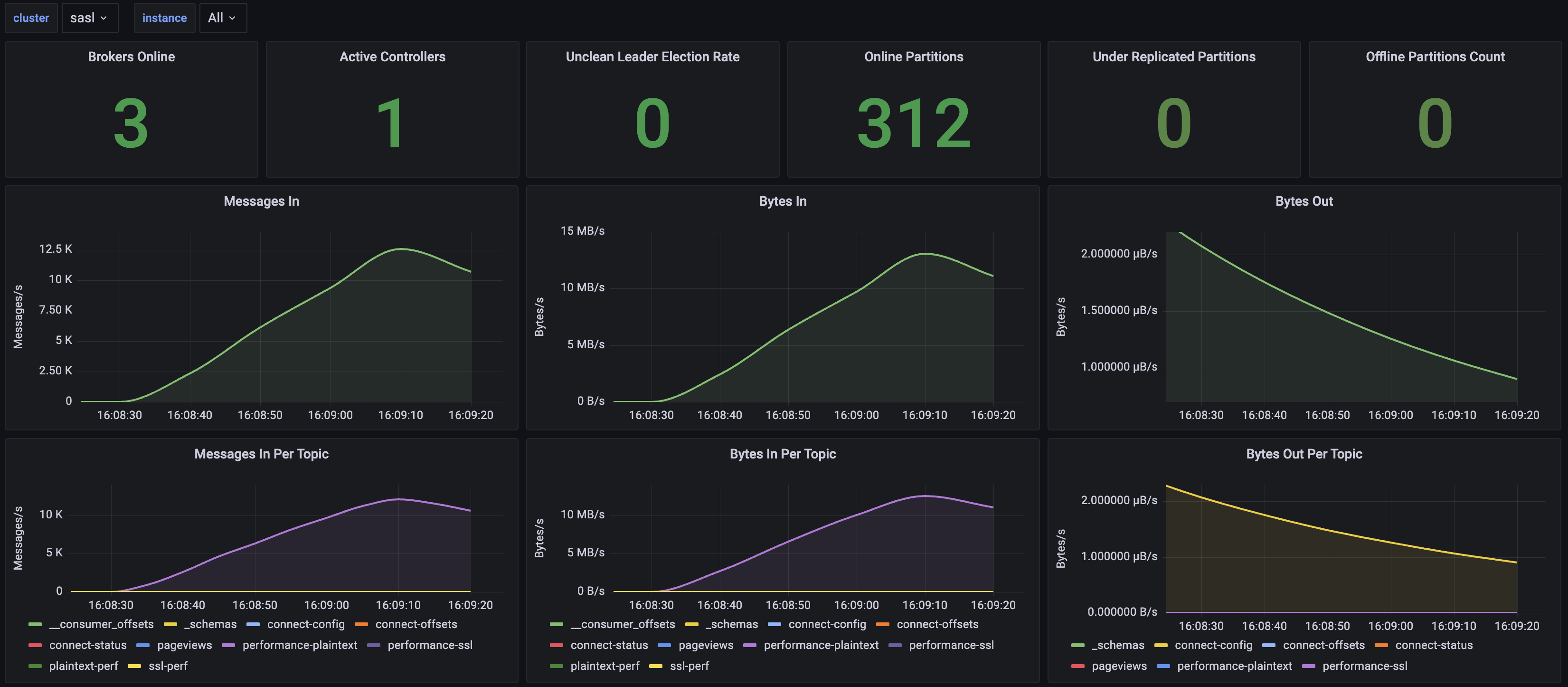

A single dashboard can be stood up to give the health of the cluster.

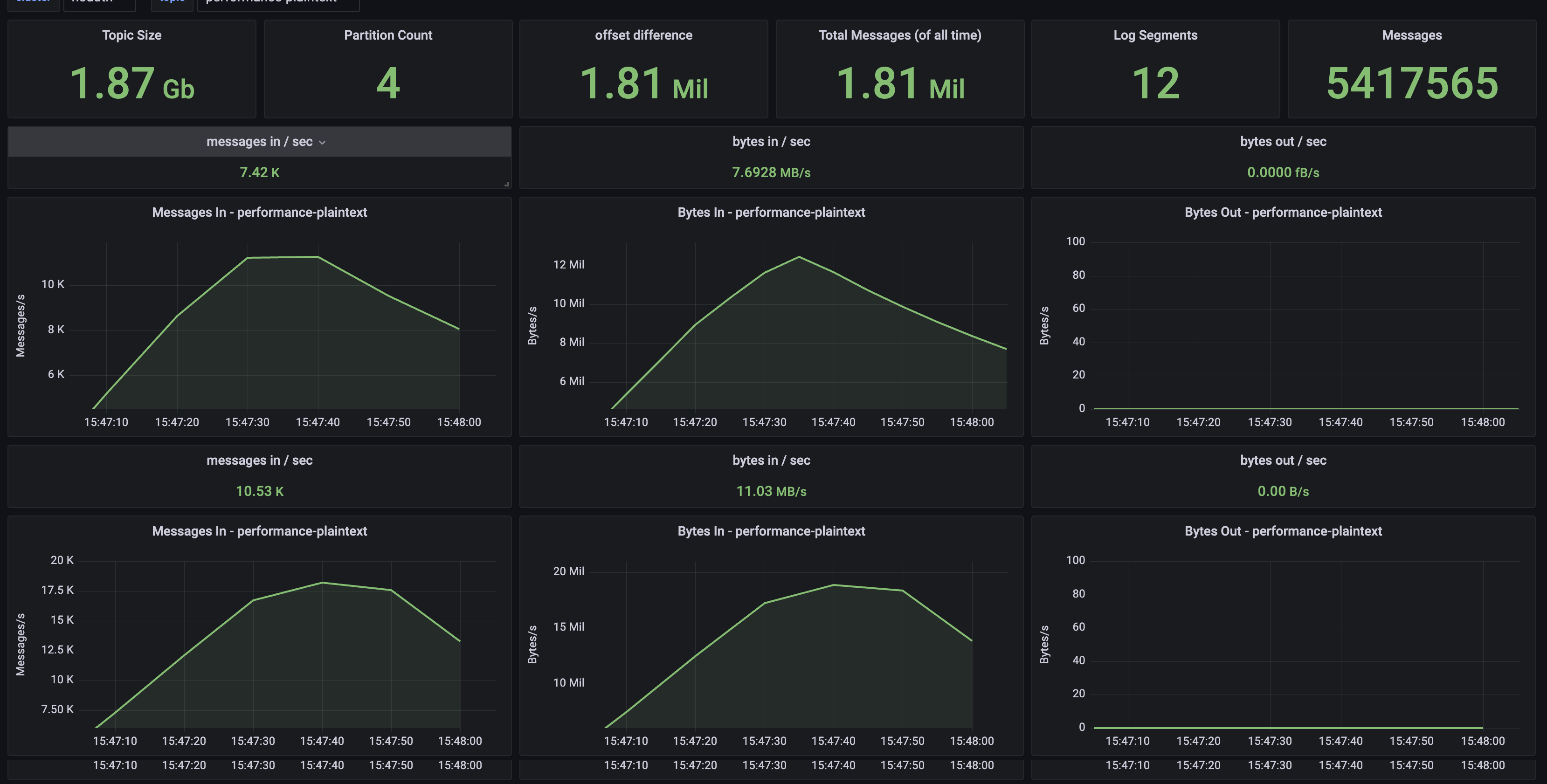

Topic

A single dashboard can provide drill-down into the messages and bytes in and bytes out.

Connections (Listeners)

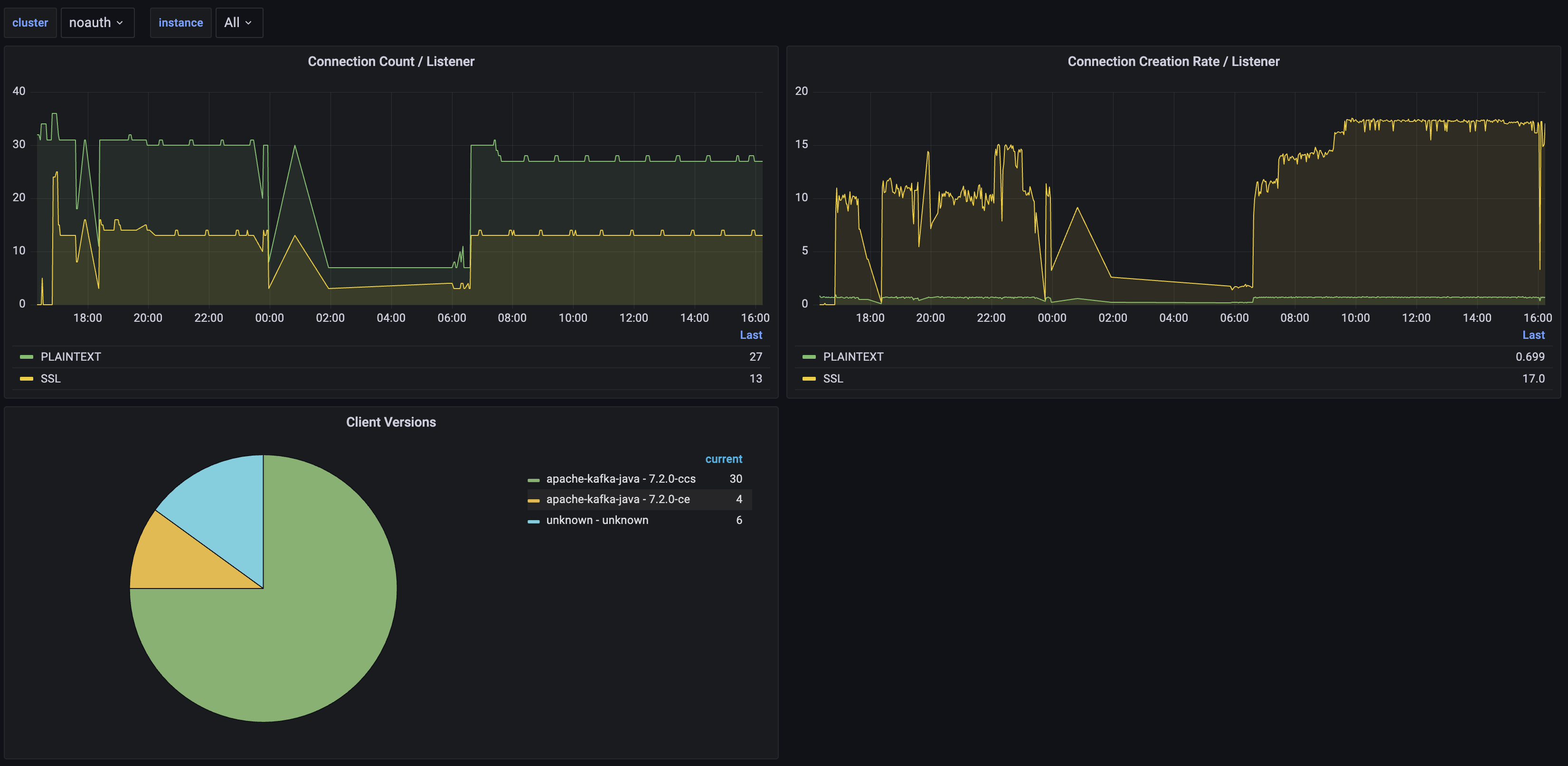

If a cluster has multiple listeners, the information on each listener can be tracked.

Kafka Connect

A single dashboard serves each Kafka Connect cluster, even when multiple Connect clusters are attached to a single Kafka cluster.

Confluent Schema Registry

Metrics of each Schema Registry are also available.

If you want to see more, I recommend checking out the demonstration project kafka-toolage. All shown dashboards are available for your evaluation.

Review

Grafana falls primarily under the Monitoring classification as stated in the setup articles.

Classification

I have classified tools into three areas: monitoring, observation, and administration. Each tool is reviewed according to its purpose and design. Grafana is a monitoring tool, and the review is based on the functionality it supports.

| functionality | supported |
|---|---|
| monitoring | ✔ |
| observation | 𐄂 |
| administration | 𐄂 |

Connectivity

Connecting was 100% successful for every deployed component.

| Cluster | Service | Protocol ¹ | Result |
|---|---|---|---|
| noauth | broker | JMX (HTTP) | ✔ |
| noauth | schema-registry | JMX (HTTP) | ✔ |
| noauth | connect (a) | JMX (HTTP) | ✔ |
| noauth | connect (b) | JMX (HTTP) | ✔ |
| ssl | broker | JMX (HTTP) | ✔ |
| ssl | schema-registry | JMX (HTTP) | ✔ |
| ssl | connect | JMX (HTTP) | ✔ |
| sasl | broker | JMX (HTTP) | ✔ |
| sasl | schema-registry | JMX (HTTP) | ✔ |
| sasl | connect | JMX (HTTP) | ✔ |
| oauth | broker | JMX (HTTP) | ✔ |

¹ While every cluster other than ssl has two Kafka listener protocols, the distinction between them does not apply to monitoring tools that leverage JMX.

Deployments Needed

Only a single deployment of Grafana is needed to observe all Kafka Clusters and their respective Connect Clusters and Schema Registries.

Ratings

These ratings are subjective. I find rating Grafana difficult because I have worked with it for 4 years, constantly changing my queries and dashboards. It is challenging for me to fully set that experience aside when reviewing against the 4 different clusters. What I discovered was that the dashboards I had already built had errors and were incomplete; they required modifications to support multiple instances.

Rating Classification: 1 difficult/rigid — 10 easy/customizable

| classification | rating | summary |
|---|---|---|
| setup | 1 ¹ | coupling of dashboards to jmx exporter makes it difficult to borrow from other provided dashboards |
| success | 10 | was able to access all metrics for all components in all clusters |
| customizable | 9 | very customizable, which is great but also means more time to set up and use |
| completeness | 8 | full access to all the metrics, provided all clusters expose their metrics the same way |
| integration | 8 | free to write your own queries, dashboards, and variables |
| ease-of-use | 8 | once dashboards are built, easy to use |

¹ When I started this review, my dashboards were already built. However, my variable setup and the ability to move between Apache Kafka clusters and Kafka Connect clusters were failing, so I had to modify the dashboards to support multiple clusters. While successful, it took additional time.

Conclusion

Monitoring is a vital component of your Kafka cluster and Apache Kafka applications. Among open-source options, Grafana is the standard. It is unclear to me how its licensing change impacts enterprise organizations and their use and adoption of Grafana.

While Grafana takes a big effort to set up and configure, once configured it is quite stable and provides a rich set of information for your development and operations teams.

Because this approach relies on metrics being made available via JMX, it is limited to self-managed installations.

I hope the kafka-toolage project can give you inspiration for using Grafana for your Kafka administration.

Reach Out

Please contact us if you have ideas, suggestions, want clarification, or just want to talk Apache Kafka administration.