Are you interested in setting up Kafka without Zookeeper and with a dedicated controller quorum? Here are the steps and a reference project showing how to do this using the Confluent community-licensed container images. A Grafana dashboard to observe the new metrics is also provided.

Update

The cp-kafka images have been improved to run kafka-storage as part of the startup process. The scripts built for 3.3 are not needed for 3.5. The article has been updated to reflect these improvements, with the legacy steps kept in case someone needs to use an older version of Apache Kafka.

Introduction

Kafka Raft (KRaft) moves the consensus protocol for the controller plane out of Zookeeper and into Apache Kafka itself. With this change, the role of a Kafka instance can be that of a controller, a broker, or both. Getting this configuration stood up requires some tweaks to the Confluent cp-kafka image.

If you want a deeper understanding of the design and implementation details, check out Jun Rao’s course on Kafka Internals, specifically the control-plane section.

Configuration

There are many configuration parameters with Apache Kafka; highlighted here are the ones necessary to build out the cluster with KRaft.

The property node.id replaces broker.id.

Be sure that all identifiers in the cluster are unique across brokers and controllers.

The process.roles property sets whether a node is a broker, a controller, or both.

Set it to broker,controller to enable both the controller and data planes on a node.

The controller.listener.names property lists the listener names used for the controller.

This tells a node which listener to use for controller communication.

While the property is a list, just like advertised.listeners, the first entry is the one used for controller communication.

The controller.quorum.voters property is a comma-delimited list of voters in the control plane, where each controller is written as node_id@hostname:port.

example:

KAFKA_CONTROLLER_QUORUM_VOTERS: 10@controller-0:9093,11@controller-1:9093,12@controller-2:9093
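
The voter format and the uniqueness requirement are easy to check before starting any containers. The following is a minimal Python sketch, not part of the reference project: the function names are made up for illustration, and the broker ids 1 to 4 are assumed values.

```python
from typing import NamedTuple


class Voter(NamedTuple):
    node_id: int
    host: str
    port: int


def parse_quorum_voters(value: str) -> list[Voter]:
    """Parse a voters string of the form node_id@hostname:port,..."""
    voters = []
    for entry in value.split(","):
        node_id, _, endpoint = entry.strip().partition("@")
        host, _, port = endpoint.rpartition(":")
        if not node_id.isdigit() or not host or not port.isdigit():
            raise ValueError(f"malformed voter entry: {entry!r}")
        voters.append(Voter(int(node_id), host, int(port)))
    return voters


def check_unique_ids(voters: list[Voter], broker_ids: list[int]) -> None:
    """Raise if any node.id is reused across controllers and brokers."""
    all_ids = [v.node_id for v in voters] + list(broker_ids)
    duplicates = sorted({i for i in all_ids if all_ids.count(i) > 1})
    if duplicates:
        raise ValueError(f"duplicate node.id values: {duplicates}")


voters = parse_quorum_voters(
    "10@controller-0:9093,11@controller-1:9093,12@controller-2:9093"
)
# Broker ids 1-4 are hypothetical; the article only fixes the controller ids.
check_unique_ids(voters, broker_ids=[1, 2, 3, 4])
print(voters[0])
```

Running a check like this against the values in a docker-compose file catches a reused node.id before the quorum fails to form.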

Additional Configuration

There are other tuning parameters for the controller plane; see the documentation for details.

Lesson Learned

Do not remove cluster settings from the dedicated controllers, since a controller is the node that performs administration operations, such as creating a topic.

Incorrectly removing these from the controllers caused topics to be created without the intended defaults, such as:

KAFKA_DEFAULT_REPLICATION_FACTOR: 3

KAFKA_NUM_PARTITIONS: 4

Storage

Another change when setting up Apache Kafka with Raft is the storage. The storage on each node must be configured before starting the JVM.

This can be done with a kafka-storage command provided as part of Apache Kafka.

- Generate a unique UUID for the cluster; you can use kafka-storage random-uuid or another means.
- Before starting the cluster, format the metadata storage with kafka-storage format.

kafka-storage format -t $KAFKA_CLUSTER_ID -c <server.properties>
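
If kafka-storage is not at hand, the cluster id can be produced by other means. The sketch below assumes the id is a random UUID written as 22 characters of unpadded URL-safe base64, which is the shape that kafka-storage random-uuid prints; the function name is hypothetical, and it is worth verifying the result against your Kafka version.

```python
import base64
import uuid


def random_cluster_id() -> str:
    """Return a random UUID as 22 characters of unpadded URL-safe base64."""
    while True:
        encoded = base64.urlsafe_b64encode(uuid.uuid4().bytes).decode("ascii")
        cluster_id = encoded.rstrip("=")
        # A leading '-' would look like a command-line option, so retry.
        if not cluster_id.startswith("-"):
            return cluster_id


cluster_id = random_cluster_id()
print(cluster_id)
```

The printed value can then be passed as $KAFKA_CLUSTER_ID to every node, since all nodes in a cluster must be formatted with the same id.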

Seeing It In Action

If you are interested in seeing all this in action, check out the kafka-raft docker-compose setup in the dev-local project. It is a fully working example with 3 controllers and 4 brokers.

Metrics

If you are going to deploy Kafka with Raft to production, having visibility into metrics is important. Adding that visibility is just as important as (if not more important than) having dedicated controllers. The key for dashboards is to ensure that they report correctly whether the data and control planes are separated or combined.

Grafana

Building a Grafana dashboard is a multi-step process: extract the metrics, store them in a time-series database (e.g. Prometheus), and then visualize the collected metrics in Grafana.

JMX Prometheus Exporter

The KRaft Monitor metrics are defined in the documentation, with an MBean name, such as kafka.server:type=raft-metrics,name=current-state.

Using a JMX client, such as jmxterm, shows that the MBean name is just kafka.server:type=raft-metrics, with each metric an attribute of that bean.

This is different from the current documentation.

If you deploy Java applications with JMX Metrics in containers, I highly recommend jmxterm.

java -jar jmxterm.jar

In the cp containers, the java process is process 1, but use the jvms command to list all available processes and verify that the JVM’s process id is indeed 1.

$>open 1

Show all the MBeans in the JVM, with beans.

$>beans

...

kafka.server:type=raft-metrics

...

Select a bean with bean, use info to explore its attributes, and use get to read the current value of an attribute.

$>bean kafka.server:type=raft-metrics

#bean is set to kafka.server:type=raft-metrics

$>info

# attributes

%0 - append-records-rate (double, r)

%1 - commit-latency-avg (double, r)

%2 - commit-latency-max (double, r)

%3 - current-epoch (double, r)

%4 - current-leader (double, r)

%5 - current-state (double, r)

%6 - current-vote (double, r)

%7 - election-latency-avg (double, r)

%8 - election-latency-max (double, r)

%9 - fetch-records-rate (double, r)

%10 - high-watermark (double, r)

%11 - log-end-epoch (double, r)

%12 - log-end-offset (double, r)

%13 - number-unknown-voter-connections (double, r)

%14 - poll-idle-ratio-avg (double, r)

$>get current-leader

current-leader = 10.0;

Leveraging the above, the following properly exposes these from JMX Prometheus Exporter.

Since the current-state attribute value is a string, its value needs to be attached as a label to capture it in Prometheus.

rules:
  - pattern: "kafka.server<type=raft-metrics><>(current-state): (.+)"
    name: kafka_server_raft_metrics
    labels:
      name: $1
      state: $2
    value: 1
  - pattern: "kafka.server<type=raft-metrics><>(.+): (.+)"
    name: kafka_server_raft_metrics
    labels:
      name: $1
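
To see why the current-state rule has to come first, here is a small Python sketch that imitates rule matching. It assumes the exporter tests each attribute as a string of the form shown in the patterns and stops at the first rule that matches; the sample lines and the apply_rules helper are illustrative only, and Python's re module stands in for the exporter's Java regular expressions.

```python
import re

# The two patterns from the exporter rules, in the same order.
# The flag marks the rule that stores the attribute text as a label.
RULES = [
    (re.compile(r"kafka.server<type=raft-metrics><>(current-state): (.+)"), True),
    (re.compile(r"kafka.server<type=raft-metrics><>(.+): (.+)"), False),
]


def apply_rules(line: str):
    """Return (labels, value) from the first rule that matches, else None."""
    for pattern, value_is_label in RULES:
        match = pattern.search(line)
        if match is None:
            continue
        name, raw = match.groups()
        if value_is_label:
            # String attribute: keep the text as a label, emit a constant 1.
            return {"name": name, "state": raw}, 1.0
        # Numeric attribute: the attribute value becomes the sample value.
        return {"name": name}, float(raw)
    return None


# Hypothetical attribute lines, shaped like the patterns expect.
print(apply_rules("kafka.server<type=raft-metrics><>current-state: leader"))
print(apply_rules("kafka.server<type=raft-metrics><>current-leader: 10.0"))
```

With the order reversed, the generic rule would claim current-state first, and its text value cannot be used as a sample value, which is why the specific rule is listed ahead of it.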

Grafana Dashboard

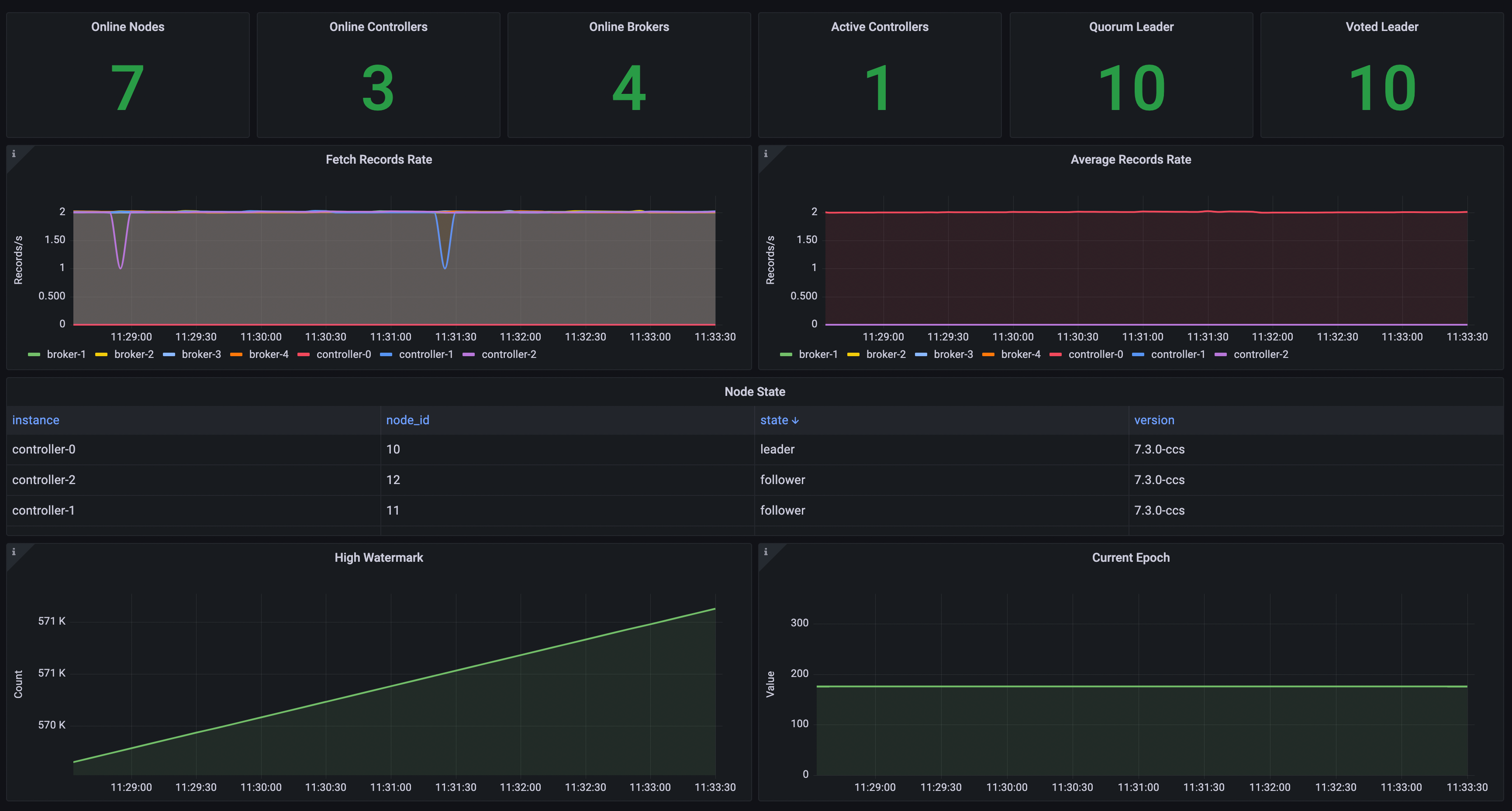

Grafana is great for custom configuration, but that means time and effort are needed to build dashboards. Here is a dashboard built around some of those raft metrics; it is not a complete dashboard.

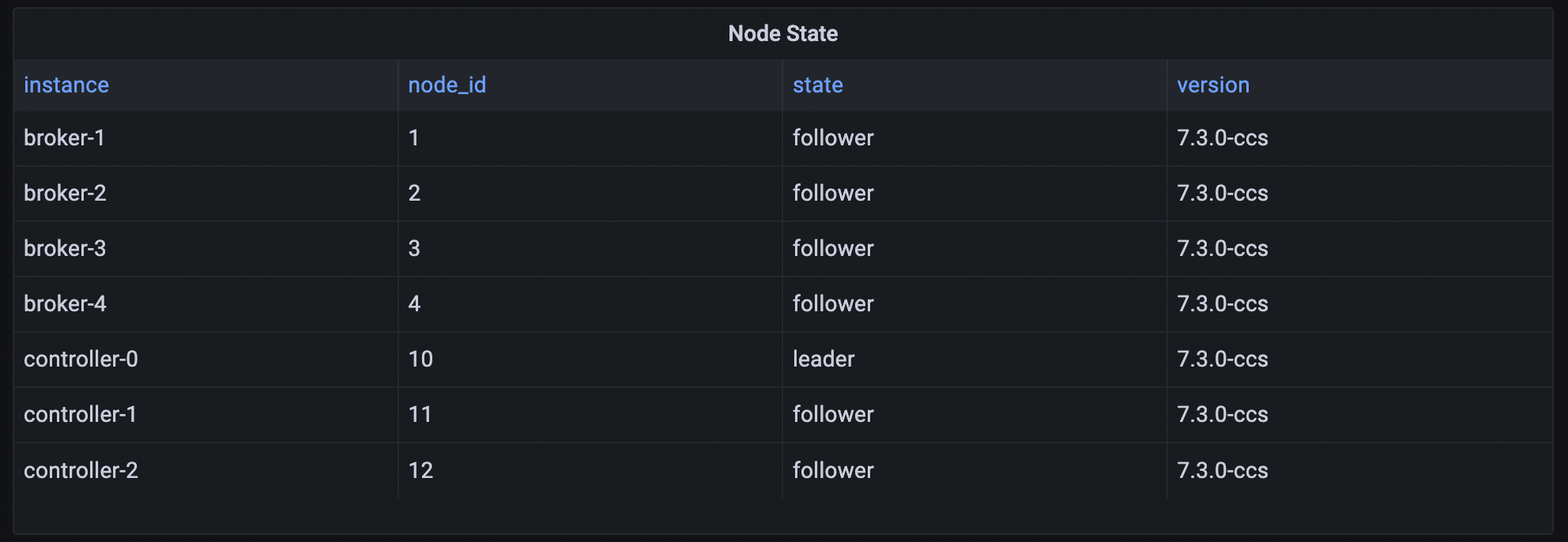

Node Information

With the metrics being emitted, put them into a Grafana dashboard.

Using current-state, we can see which node is the leader, in addition to capturing node.id.

In addition, the dashboard component joins in other data to provide additional information on each node.

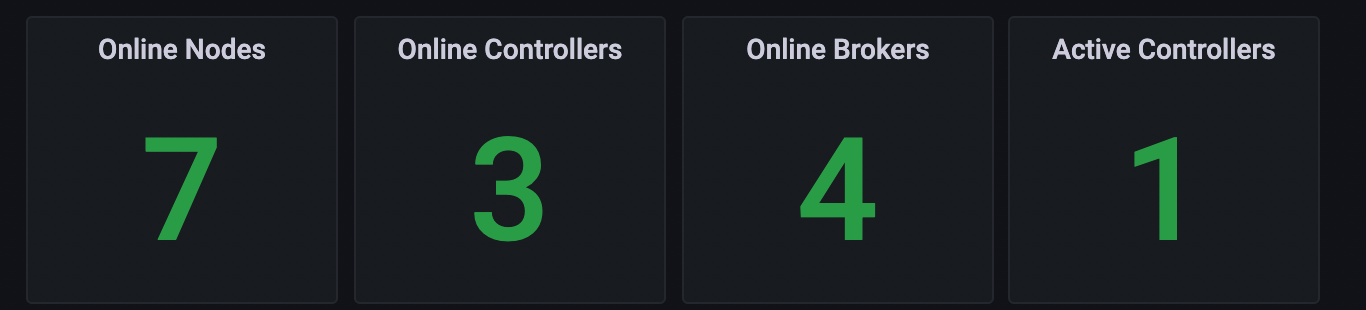

Node Counts

Counts of nodes and active controllers are always reassuring, and this leverages an existing metric, kafka_controller_kafkacontroller_value{name="ActiveControllerCount",}.

This metric is emitted only from controllers, so counting the series of this metric gives the number of controllers in the cluster, and summing the value of the metric gives the actual number of active controllers; alert if this is ever not equal to 1.
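
The count-versus-sum idea can be shown with a few lines of Python. The instance names, the port, and the sample values are invented for illustration; in Grafana the same result comes from applying count() and sum() to the metric.

```python
# Hypothetical scrape results: instance -> ActiveControllerCount value.
# Brokers are absent because only controllers emit this metric.
samples = {
    "controller-0:7071": 0.0,
    "controller-1:7071": 1.0,
    "controller-2:7071": 0.0,
}


def controller_counts(samples: dict[str, float]) -> tuple[int, int]:
    """Return (controllers, active controllers), i.e. count() and sum()."""
    return len(samples), int(sum(samples.values()))


controllers, active = controller_counts(samples)
if active != 1:
    print(f"ALERT: expected 1 active controller, found {active}")
else:
    print(f"{controllers} controllers, {active} active")
```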

Check out the dashboard to see how the other values are calculated, as it is the same as in the zookeeper-based installations.

Active Controller

To get the node.id of the quorum leader, just find max(kafka_server_raft_metrics{name="current-leader",}).

Because each node is scraped at a slightly different time, different values are possible at the time of a change; max is used to make the single-value display easy to build.

If a new leader is being voted in, that will show up in the voted leader metric. In a single-value panel, I do not expect this to be that useful, but recorded as a time-series history, this metric would have more value.

Full Dashboard

Here is an example dashboard that also captures the fetch rate of the controller metadata.

Open-Source Tools

I have checked a variety of open-source tools and have been unsuccessful in seeing raft metrics in any of them. Active controller information is incorrectly displayed. None of the tools I tried supported raft yet, so it is important that, before you upgrade, you have a proper monitoring and alerting strategy in place.

Summary

Be sure you properly test your monitoring and Apache Kafka support infrastructure as part of your move to the Kafka Raft consensus protocol. Also, validate that kafka-raft metrics are captured and ensure that dashboards and tools work when brokers and controllers are on separate nodes.

Legacy Documentation

If you are using cp-kafka version 7.3, then additional steps are necessary to properly bootstrap your container-based deployment.

With cp-kafka version 7.5, the only necessary step is to provide the UUID for running kafka-storage; the container will automatically run it on start-up if the storage directory does not exist.

Container Images

With these details in mind, applying them to Confluent’s cp-kafka image takes a little finesse, at least with version 7.3.0.

The cp-kafka container’s entry point, /etc/confluent/docker/run, builds the configuration for Apache Kafka from environment variables following conventions.

In addition, there are validation steps to catch misconfiguration.

These validations, however, need to change, since certain assumptions no longer apply in a zookeeper-less setup.

In addition, a node that is only for a controller does not define advertised.listeners so validation for that needs to be removed.

Script Modifications

The following tasks need to be done to start up these images with raft.

- Remove KAFKA_ZOOKEEPER_CONNECT validation for all nodes.
- Remove checking for zookeeper ready state for all nodes.
- Remove KAFKA_ADVERTISED_LISTENERS validation for dedicated controller nodes.
- Create the metadata store for all nodes by running kafka-storage format.
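
Formatting only needs to happen once per node, so a start-up script can guard the call. The Python sketch below illustrates that guard; it is not the project's broker.sh or controller.sh. It assumes that a formatted log directory contains a meta.properties file, and the helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def is_formatted(log_dir: Path) -> bool:
    """Assumption: a formatted directory contains a meta.properties file."""
    return (log_dir / "meta.properties").is_file()


def format_command(cluster_id: str, config_path: str) -> list[str]:
    """Build the kafka-storage format invocation used in the article."""
    return ["kafka-storage", "format", "-t", cluster_id, "-c", config_path]


def format_if_needed(cluster_id: str, config_path: str, log_dir: Path) -> bool:
    """Format the storage once; return True if the command was run."""
    if is_formatted(log_dir):
        return False
    # check=True makes a failed format stop the start-up sequence.
    subprocess.run(format_command(cluster_id, config_path), check=True)
    return True
```

A start-up script would call format_if_needed with the node's cluster id, properties file, and log directory before handing over to /etc/confluent/docker/run.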

Command

The cp-kafka image’s command is /etc/confluent/docker/run. The scripts, together with the docker-compose command setting, allow these containers to start with the raft consensus protocol.

volumes:
  - ./broker.sh:/tmp/broker.sh
command: bash -c '/tmp/broker.sh && /etc/confluent/docker/run'

volumes:
  - ./controller.sh:/tmp/controller.sh
command: bash -c '/tmp/controller.sh && /etc/confluent/docker/run'