Giving developers, data engineers, and anyone else interested in real-time technologies the ability to explore tools locally is a great advantage. I have heard many stories of developers leaving containers running over the weekend, only to use up too many of their free developer credits with a cloud provider. I’ve also seen developers avoid fully exploring various configurations, nuances, or tools for fear of having to expense unexpected charges.

Project Details

This real-time development project is available on GitHub as dev-local. All of the Kinetic Edge contributions are licensed under the Apache License 2.0; other licenses are involved as well, so follow the guidelines of each project accordingly.

Demonstrations of streaming concepts are provided in dev-local-demos, and new projects will be added and written about as they are developed. I hope that this ecosystem can save you time in building your POC and can improve your understanding of real-time streaming.

Ecosystem

Network

A single network is created to be leveraged across each application.

This network only needs to be created once, using the network.sh script. Sharing a network while keeping a separate docker-compose environment for each application makes it easy to start only what is needed.

Components are separated into their own docker-compose environments and share this network, allowing them to work together to demonstrate real-time processing.
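As a minimal sketch of what a create-once network step might look like, the function below checks for the network before creating it, so it is safe to run repeatedly. The network name `dev-local` and the function name `ensure_network` are assumptions for illustration and are not taken from the project's network.sh.

```shell
#!/bin/sh
# Sketch only: NETWORK_NAME is an assumed name, not taken from the project.
NETWORK_NAME="${NETWORK_NAME:-dev-local}"

# Create the shared Docker network only if it does not exist yet.
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create "$1" >/dev/null && echo "network $1 created"
  fi
}
```

Calling `ensure_network "$NETWORK_NAME"` before starting any of the docker-compose environments would then be enough, whichever application is started first.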

Apache Kafka with Confluent Schema Registry

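This is the core component of this ecosystem. Running four brokers makes it easier to understand Kafka. With only three brokers and a replication factor of 3, every broker holds a copy of every partition, which can give the misconception that all brokers always have a copy of all topics.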

- 1 Zookeeper

- 4 Brokers

- 1 Confluent Schema Registry
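One way to see the effect of the fourth broker is to create a topic with a replication factor of 3 and describe it: the Replicas field for each partition then lists only three broker IDs, so for every partition one broker holds no copy. This is a sketch under assumptions: the bootstrap address `localhost:19092` and the topic name `replica-demo` are placeholders, and with an Apache Kafka download the tool is named `kafka-topics.sh` rather than `kafka-topics`.

```shell
#!/bin/sh
# Sketch only: the bootstrap address is an assumption; adjust to your setup.
KAFKA_TOPICS="${KAFKA_TOPICS:-kafka-topics}"
BOOTSTRAP="${BOOTSTRAP:-localhost:19092}"

# Create a topic with 4 partitions, each replicated to 3 brokers.
create_demo_topic() {
  "$KAFKA_TOPICS" --bootstrap-server "$BOOTSTRAP" \
    --create --topic "$1" --partitions 4 --replication-factor 3
}

# Show the leader, replicas, and in-sync replicas for each partition.
describe_topic() {
  "$KAFKA_TOPICS" --bootstrap-server "$BOOTSTRAP" --describe --topic "$1"
}
```

Running `create_demo_topic replica-demo` followed by `describe_topic replica-demo` against the four-broker cluster shows which broker is left out of each partition.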

If you do not need to start Schema Registry, a simple way to exclude it is as follows:

    docker-compose up -d $(docker-compose config --services | grep -v schema-registry)
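The same exclusion can be wrapped in a small helper so that any single service can be left out. This is only a sketch: the function names `without_service` and `up_without` are hypothetical, and unlike the plain `grep -v` above, the filter here matches the whole service name exactly.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of the project.
COMPOSE="${COMPOSE:-docker-compose}"

# Read service names on stdin and drop the one given as $1.
# -x -F matches the whole line literally, so "schema-registry" does not
# also remove a service whose name merely contains that text.
without_service() {
  grep -v -x -F -- "$1"
}

# Start every service in the current compose file except $1.
up_without() {
  # Unquoted on purpose: each service name must become its own argument.
  "$COMPOSE" up -d $("$COMPOSE" config --services | without_service "$1")
}
```

For example, `up_without schema-registry` would start everything else defined in the compose file of the current directory.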

Whether to leverage schemas as part of your Kafka deployment is a core decision to make, and that is why Schema Registry is provided here as part of the kafka-core container.

It is highly recommended to have a local installation of Kafka on your laptop so you can use the command-line interface. The Confluent community edition is great in that you also get access to the ksql CLI and the Avro console consumer and producer. To get the community edition, go to Confluent’s get-started page and select the "here" link in the Download Confluent Platform section.

Kafka Connect Distributed Cluster

- 2 Kafka Connect servers forming a cluster named connect-cluster.

- Secrets to be used by any connectors can be placed in ./secrets.

- Place connectors in ./jars and start/restart the server.

- Having confluent-hub is very helpful. You can download it directly and unzip it into your /usr/local/confluent directory, or on macOS use brew as stated in their client documentation.

- With confluent-hub installed, most connectors can be installed easily. Just be sure to create a dummy connect-distributed.properties (Kafka Connect is installed via docker and is configured for the ./jars directory).

      confluent-hub install --no-prompt \
        --worker-configs ../tmp/connect-distributed.properties \
        --component-dir ./connect/jars \
        confluentinc/kafka-connect-s3:latest
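The dummy worker configuration only needs to exist so that `--worker-configs` has a file to point at. A minimal sketch of creating it is shown here; the function name `make_dummy_worker_config` is hypothetical, and the default path simply mirrors the `--worker-configs` argument used above.

```shell
#!/bin/sh
# Sketch only: creates a placeholder worker config for confluent-hub.
# The default path mirrors the --worker-configs argument used in this post.
make_dummy_worker_config() {
  config="${1:-../tmp/connect-distributed.properties}"
  # Make sure the parent directory exists.
  mkdir -p "$(dirname "$config")"
  # Leave an existing file untouched; otherwise write a one-line placeholder.
  [ -f "$config" ] || printf '# placeholder for confluent-hub\n' > "$config"
  # Print the path so it can be passed on to other commands.
  echo "$config"
}
```

Running `make_dummy_worker_config` once before `confluent-hub install` is enough; later runs keep whatever the file already contains.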

ksqlDB

- Confluent’s ksqlDB is a great way to quickly see stream-based processing, even if custom applications built with Kafka Streams are in your future.

Monitoring

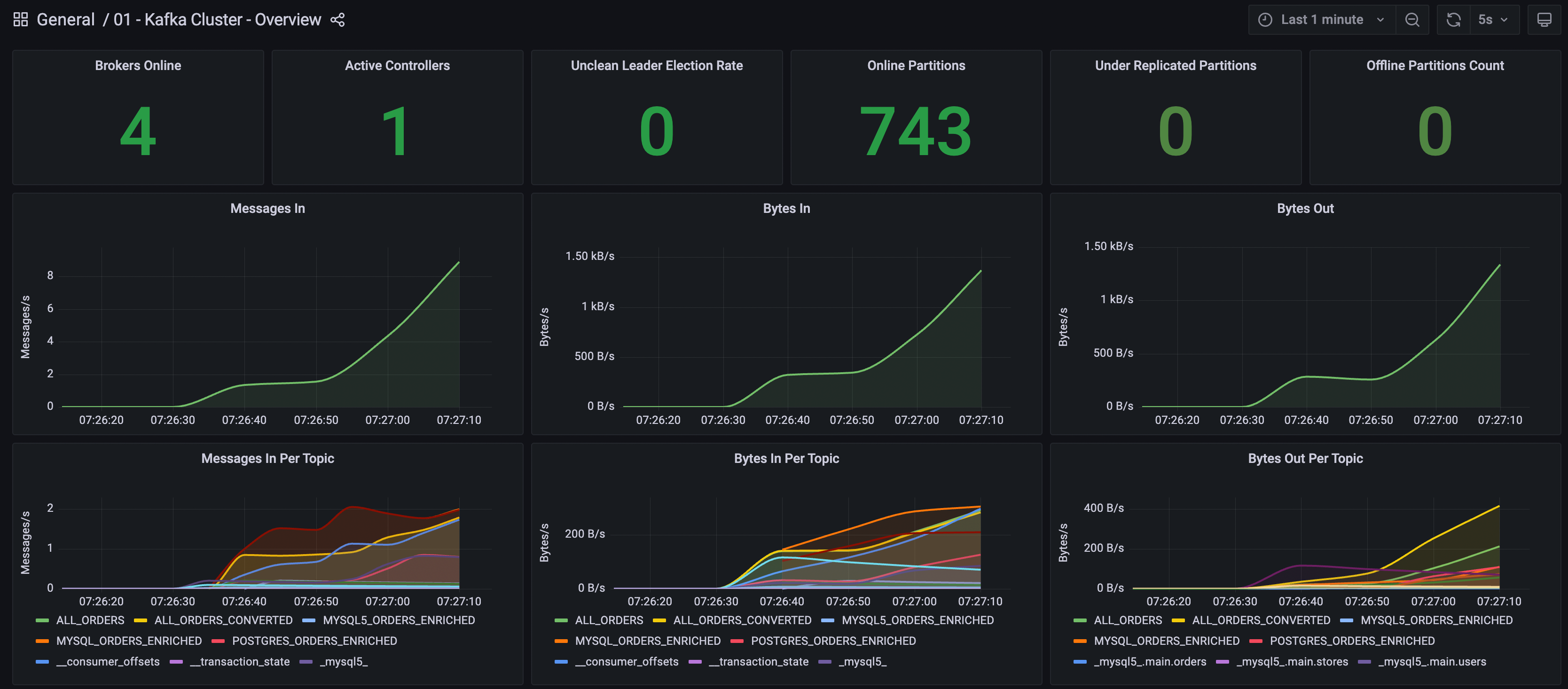

Grafana (w/Prometheus)

- A monitoring dashboard focused on visualizing the health of Apache Kafka and more.

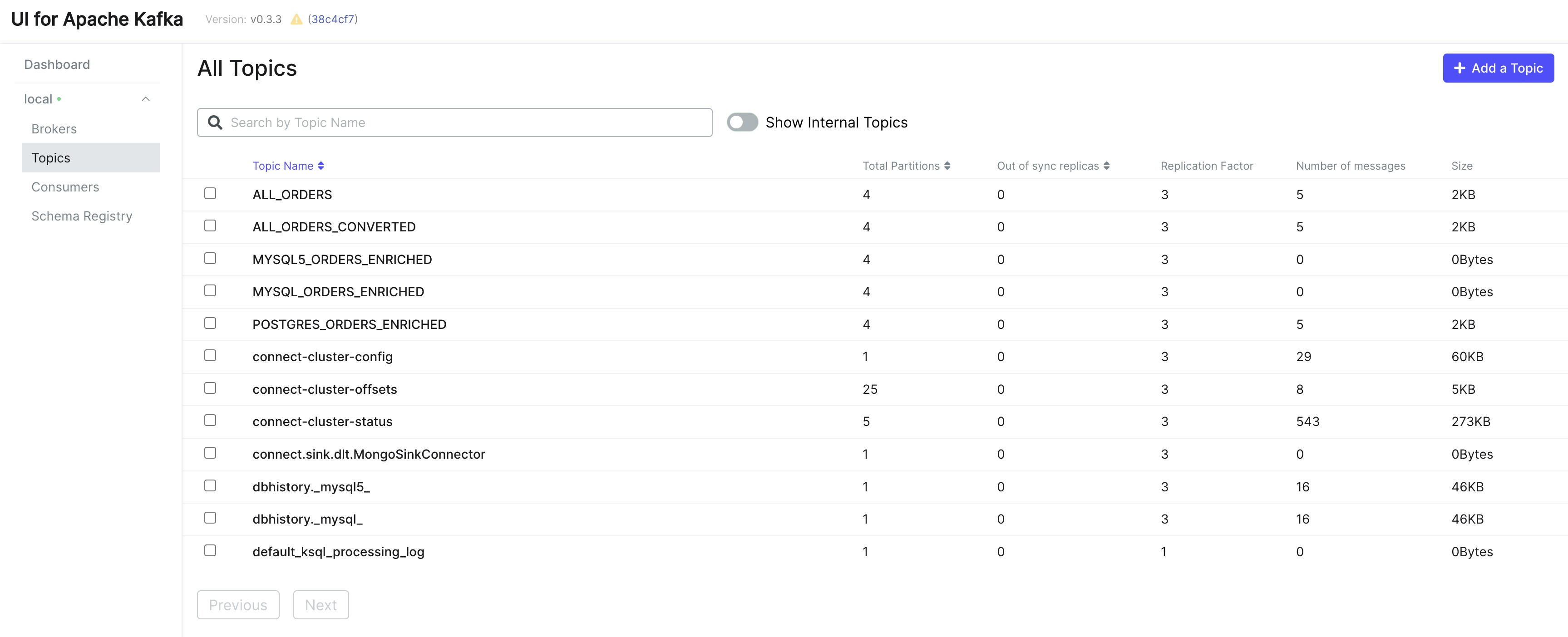

Kafka Visualization Tools

Various open-source Kafka UI tools for exploring and monitoring Apache Kafka.

Administration tools such as Kafka UI are a great way to visualize and observe the cluster.

Additional applications are available as containers in this ecosystem:

- Apache Druid

- Apache Pinot

- Apache Cassandra

- Elasticsearch / Kibana

- MongoDB

- MinIO (S3-compatible object store)

- MySQL

- Postgres

- Oracle (licensing requires the image to be built; the process is documented and the scripts are referenced)

Maintenance

Versions will be updated, and the demonstrations found in dev-local-demos will be used to validate those upgrades.

I am always interested in applications related to real-time streaming, and will be updating this project with new applications from time to time.

Reach out

Please contact us if you have improvements, want clarification, or just want to talk streaming.